From Insights to Impact

Actionable Interpretability for Neural Machine Translation

Abstract

Neural language models have revolutionized the field of natural language processing, quickly becoming essential tools for a wide range of practical applications. Recent advances in interpretability research offered valuable insights into the inner workings of these systems, but often failed to translate into downstream improvements for users in real-world settings. This dissertation investigates the end-to-end development of interpretability methods to improve the trustworthiness and controllability of neural machine translation systems, from conception to experimentation with end users. Its findings address fundamental questions about how language models leverage contextual information, how their generation processes can be steered for personalization, and how interpretability insights can enhance professional translation practices.

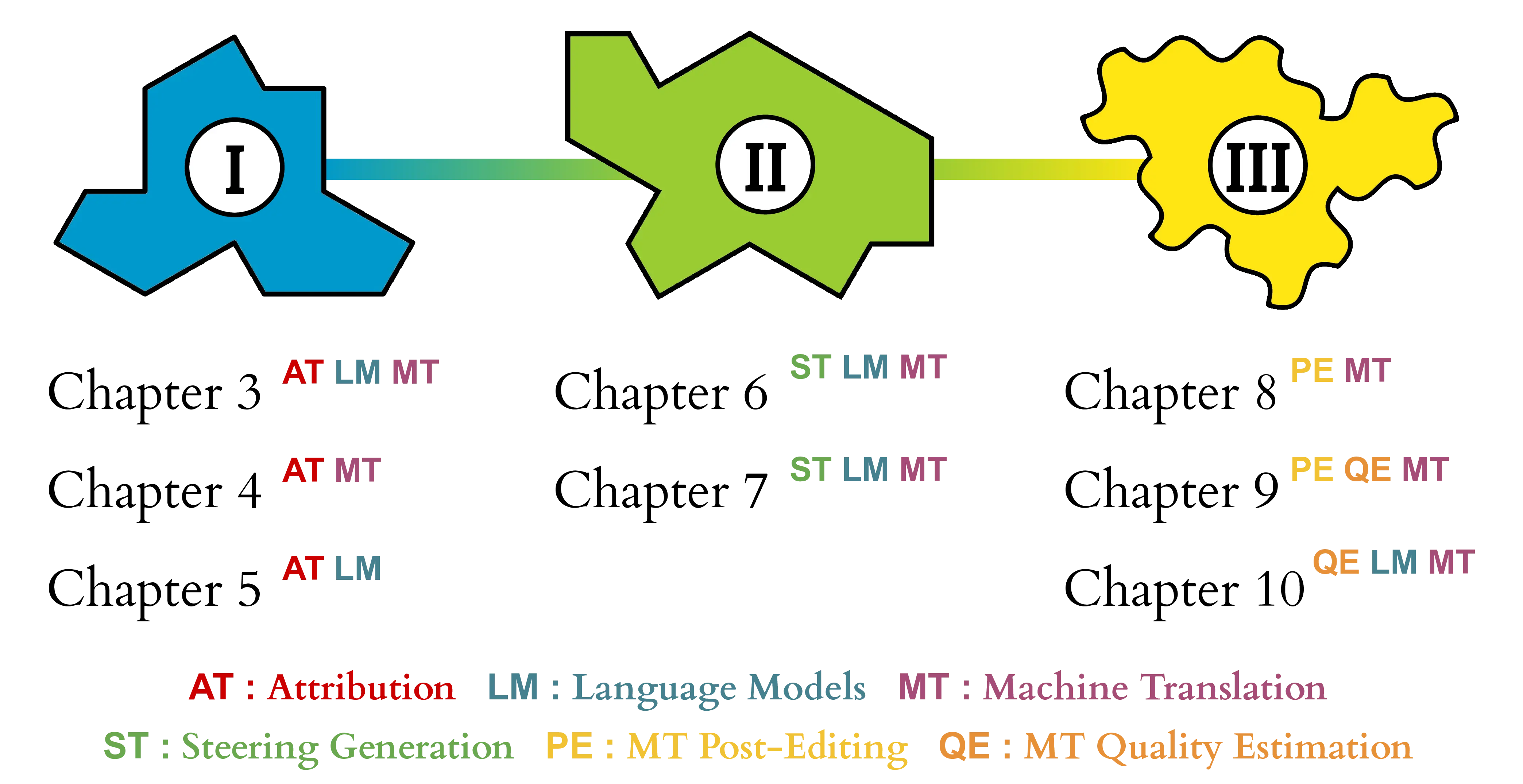

The thesis work is organized into three interconnected parts. Part I develops foundational tools and methods for understanding how language models use contextual information during generation. We begin by introducing Inseq, an open-source toolkit for interactive analysis of language model behavior, showcasing its use for gender bias detection in machine translation and activation attribution using gradient-based methods. We then design PECoRe, a framework using contrastive input attribution to quantify how language models exploit contextual information, and demonstrate its effectiveness in detecting context influence in context-aware machine translation systems. Finally, we extend PECoRe to retrieval-augmented generation, using model internals to produce faithful, efficient and high-quality citations for open-book question answering.

Part II shifts the focus of our investigation from analysis to intervention, exploring methods for controlling translation outputs through prompting-based and steering-based approaches. We first present Ramp, a retrieval-augmented prompting technique exploiting relevant examples and style labels for attribute-controlled translation. We then move to the more challenging domain of literary translation, highlighting the effectiveness of steering interventions in conditioning models’ generation by surgically altering their internal representations. In particular, we show that interpretable concepts extracted by trained sparse autoencoders can be used to mimic personal translation styles from human professional translators, and that successful prompting and steering approaches converge on similar mechanistic solutions.

Finally, Part III explores how insights from model internals can inform human editors in professional translation workflows. We begin by conducting a post-editing user study spanning six typologically diverse languages (DivEMT), showing that translation productivity gains vary dramatically across language pairs, with typological similarity being more influential than traditional quality metrics. Our second study, QE4PE, investigates how word-level error highlights impact the productivity of professional post-editors and the quality of their translations, including both supervised and interpretability-based approaches. We conclude with a broad evaluation of unsupervised quality estimation methods, showing that error detection approaches based on model internals can outperform supervised baselines, and highlighting the importance of calibration and multiple annotations to account for human label variation.

Overall, this dissertation advances the field of machine translation interpretability by developing accessible tools and methods for understanding context usage, enabling fine-grained control over translation outputs, and establishing empirical evidence for the use of model internals in professional translation workflows. These contributions, taken together, lay the groundwork for the next generation of trustworthy, controllable, and user-centered translation systems.

1 Introduction

In recent years, language models have undergone a significant transformation, going from simple research prototypes producing barely coherent text to becoming a cornerstone of modern technological infrastructure. This success stems in large part from the remarkable ability of large neural networks such as the transformer (Vaswani et al., 2017) to learn rich representations of language—and by extension, our world and society—from staggering amounts of text. Yet, the complex and deeply intertwined structure that renders these systems so powerful is also the main culprit behind their opacity. The inner workings of neural networks remain notoriously difficult to interpret, and the lack of transparency in their decision-making processes has raised serious concerns about their reliability and fairness in high-stakes applications (Rudin, 2019).

These circumstances have led to a growing interest in interpretability— a field closely aligned with the broader area of explainable artificial intelligence (XAI), which seeks to develop methods and tools to understand how neural networks work and provide insights into their decision-making processes (Doshi-Velez and Kim, 2017; Li et al., 2022). In natural language processing (NLP), interpretability research has made significant strides by uncovering how language models encode and process factual knowledge and linguistic information (Tenney et al., 2019; Belinkov, 2022; Meng et al., 2022), revealing their use of context during generation (Clark et al., 2019; Ferrando et al., 2022) and identifying the learned mechanisms underlying their capabilities (Elhage et al., 2021; Saphra and Wiegreffe, 2024).

While interpretability insights have earned broad recognition and influence within the NLP research community (Mosbach et al., 2024), critics have often pointed out that these findings rarely translate into actionable improvements for real-world systems (Räuker et al., 2023; Rai et al., 2024; Hendrycks and Hiscott, 2025). Most interpretability work today focuses on identifying subnetworks and mechanisms responsible for specific tasks inside language models (Ferrando et al., 2024; Sharkey et al., 2025), yet few studies have put interpretability insights in relation to end-users’ needs and desires (Ehsan et al., 2021), despite their crucial role in determining the practical usefulness of interpretability findings (Ehsan et al., 2024). This disconnect stems from a fundamental divide between research communities: most AI interpretability researchers pursue theoretical understanding of complex systems, while human-computer interaction (HCI) researchers prioritize actionable insights and practical applications.

A prime example of this disconnect can be found in the field of machine translation (MT), a long-standing area of research within NLP. MT researchers pioneered the use of neural language models for sequence generation tasks (Sutskever et al., 2014; Bahdanau et al., 2015), and were among the first to analyze their inner workings (Belinkov et al., 2017; Voita et al., 2019; Rogers et al., 2020). Yet, despite the significant progress in the performance of MT systems across hundreds of languages over the past decade, the field has been remarkably slow to bring interpretability insights to the users of these systems, especially in the case of professional translators who work with these systems on a daily basis. Users of “classic” translation tools such as Google Translate are, to this day, simply presented with translations, without the possibility to personalize their tone or properties, quantify the model uncertainty in its response, or identify potential errors or alternative formulations. At the other extreme, when large language models like GPT-4 (OpenAI, 2023) eagerly offer eloquent justifications alongside their translations, these explanations may sound plausible but often fail to reflect the model’s actual processing and context usage, resulting in plausible yet unfaithful rationalizations (Turpin et al., 2023).

This dissertation aims to bridge the gap between method-centric interpretability research and outcome-centric real-world machine translation applications. We develop novel methods to understand and control language model generation, then study how to integrate these advances effectively into human translation workflows. Our research spans three interconnected macro-themes: (1) understanding how language models exploit contextual information during generation, (2) controlling model generation for personalized translation outputs, and (3) integrating interpretability insights into human translation workflows. Our methodological contributions, empirical evaluations, and user studies demonstrate how insights from interpretability research can lead to meaningful impact in the way machine translation systems are used in real-world translation workflows.

1.1 Outline and Contributions

The experimental chapters of this dissertation are organized into three parts, each addressing one of the research directions outlined above. Each part is composed of multiple chapters, each presenting a self-contained contribution or study related to the overarching theme. Figure 1.1 provides a visual overview of parts and chapters, highlighting for each chapter the topics introduced in detail in Chapter 2. Below, we summarize the contents, research questions and contributions of each part.

Part I: Attributing Context Usage in Multilingual NLP

Part I establishes the foundational infrastructure and methodological frameworks for understanding how neural language models and machine translation systems process contextual information during generation. We begin with Inseq (Chapter 3), a toolkit that democratizes access to interpretability analyses of generative language models, providing the foundation for our investigations into context usage. Then, Chapter 4 introduces PECoRe, a data-driven framework for quantifying the plausibility of context usage in language models through the contrastive identification of context-sensitive tokens and contextual cues that influence their prediction. PECoRe is used to study context usage in context-aware machine translation systems, identifying failure cases stemming from an incorrect usage of context. Chapter 5 extends this analysis to modern large language models and retrieval-augmented generation settings with Mirage, adapting the PECoRe framework to demonstrate how model internals enable faithful answer attribution in question answering. This part addresses two fundamental research questions:

Part I’s primary contributions include: (1) two open-source releases of the Inseq interpretability library; (2) the contrastive attribution tracing (CAT) method, a gradient-based alternative to causal intervention for efficiently identifying salient model components; (3) the PECoRe framework for context reliance attribution in language models, enabling data-driven exploration of context usage patterns in context-aware MT systems; and (4) an extended evaluation of context attribution for retrieval-augmented generation using Mirage, producing high quality citations of retrieved documents while ensuring greater faithfulness to the model’s reasoning process.

Part II: Conditioning Generation for Personalized Machine Translation

Part II moves from understanding context usage to actively controlling model generation for customized translation outputs. Across two chapters, we explore two paradigms to condition machine translation outputs—prompting-based methods and direct interventions in model processing—addressing the question:

Chapter 6 pioneers the usage of prompting-based strategies for attribute-controlled translation, while Chapter 7 connects generation conditioning to interpretability techniques, expanding the scope of our analysis from simple attributes in common domains to sophisticated personal styles in the challenging literary translation domain.

The core contributions of Part II include: (1) Ramp, a novel prompting methodology achieving strong performance in attribute-controlled translation across multiple languages and attributes without model fine-tuning; (2) the first comprehensive comparison of prompting versus interpretability-based steering for machine translation personalization; (3) a novel contrastive steering method using sparse autoencoder latents to achieve personalization accuracy comparable to prompting while preserving quality in literary translation; and (4) evidence that prompting and steering methods converge to similar mechanistic solutions, revealing fundamental principles of generation conditioning.

Part III: Interpretability in Human Translation Workflows

Part III evaluates how interpretability insights can benefit human professionals who edit machine-translated content in a practical sense. We begin with DivEMT (Chapter 8), a study investigating the effectiveness of professional MT post-editing across a diverse set of mid-resourced languages, going beyond the one-size-fits-all analysis of high-resourced translation directions. This allows us to establish our human evaluation setup, providing valuable insights into the question:

Building upon these insights, our second large-scale study QE4PE (Chapter 9) investigates how word-level error span highlights—including those derived from MT systems’ uncertainty during generation—impact the productivity of professional translators and the quality of post-edited contents:

Chapter 10 concludes our human-centered investigation with a deeper analysis of multiple uncertainty and interpretability-based word-level quality estimation methods. Such analysis allows us to assess how the performance of such techniques varies across different models, languages and human annotators:

Part III contributions include (1) DivEMT, a cross-lingual post-editing dataset enabling controlled comparison of translator productivity across editing modalities and typologically diverse languages; (2) evidence that MT quality metrics fail to correlate with human post-editing productivity across languages, with productivity being heavily influenced by source-target language relatedness; (3) QE4PE, a comprehensive post-editing dataset containing error spans, behavioral editing metrics, and quality annotations from 42 professional post-editors for two translation directions; (4) evidence that error span highlights may reduce productivity but improve critical error detection; and (5) evidence that unsupervised quality estimation methods based on model internals can match state-of-the-art supervised approaches in both accuracy and downstream usability, revealing how subjective editing choice impact the evaluation of error span detection methods.

1.2 Scientific Output

This dissertation is the product of several research articles and open-source projects, which are categorized in the following sections.

1.2.1 Main Publications

The following articles represent the main contributions reflected in this thesis’ experimental chapters, organized in their respective parts:1

Introduction and Background

- Ferrando, J., Sarti, G., Bisazza, A. and Costa-jussà, M. R. (2024). A Primer on the Inner Workings of Transformer-based Language Models. Arxiv Preprint (Chapter 2)

Part I: Attributing Context Usage in Multilingual NLP

Sarti, G., Feldhus, N., Sickert, L., van der Wal, O., Nissim, M. and Bisazza, A. (2023a). Inseq: An Interpretability Toolkit for Sequence Generation Models. In Proc. of the 61st Annual Meeting of the Association for Computational Linguistics (ACL Demo) (Chapter 3)

Sarti, G., Feldhus, N., Qi, J., Nissim, M. and Bisazza, A. (2024d). Democratizing Advanced Attribution Analyses of Generative Language Models with the Inseq Toolkit. In Proc. of the 2nd World Conference on eXplainable Artificial Intelligence: Late-breaking works and demos (xAI Demo) (Chapter 3 and Chapter 4)

Sarti, G., Chrupała G., Nissim, M. and Bisazza, A. (2024c). Quantifying the Plausibility of Context Reliance in Neural Machine Translation. In Proc. of the 12th International Conference on Learning Representations (ICLR) (Chapter 4)

Qi, J.\(^\dagger\), Sarti, G.\(^\dagger\), Fernández, R. and Bisazza, A. (2024). Model Internals-based Answer Attribution for Trustworthy Retrieval-Augmented Generation. In Proc. of the 2024 Conference on Empirical Methods in Natural Language Processing (EMNLP) (Chapter 5)

Part II: Conditioning Generation for Personalized Machine Translation

Sarti, G., Htut, P. M., Niu, X., Hsu, B., Currey, A., Dinu, G. and Nadejde, M. (2023b). RAMP: Retrieval and Attribute-Marking Enhanced Prompting for Attribute-Controlled Translation. In Proc. of the 61st Annual Meeting of the Association for Computational Linguistics (ACL) (Chapter 6)

Scalena, D.\(^\dagger\), Sarti, G.\(^\dagger\), Bisazza, A., Fersini, E. and Nissim, M. (2025). Steering Large Language Models for Machine Translation Personalization. Arxiv Preprint (Chapter 7)

Part III: Interpretability in Human Translation Workflows

Sarti, G., Bisazza, A., Guerberof-Arenas, A. and Toral, A. (2022). DivEMT: Neural Machine Translation Post-Editing Effort Across Typologically Diverse Languages. In Proc. of the 2022 Conference on Empirical Methods in Natural Language Processing (EMNLP) (Chapter 8)

Sarti, G., Zouhar, V., Chrupała, G., Guerberof-Arenas, A., Nissim, M. and Bisazza, A. (2025a). QE4PE: Word-level Quality Estimation for Human Post-Editing. Transactions of the Association for Computational Linguistics (TACL) (Chapter 9)

Sarti, G., Zouhar, V., Nissim, M. and Bisazza, A. (2025b). Unsupervised Word-level Quality Estimation for Machine Translation Through the Lens of Annotators (Dis)agreement. In Proc. of the 2025 Conference on Empirical Methods in Natural Language Processing (EMNLP) (Chapter 10)

I led the conceptualization, implementation, experimental evaluation, and manuscript writing for each article for which I am the sole first author. For articles with shared first authorship, I co-led the conceptualization, experimental design, and manuscript writing. In Qi^* et al. (2024), I also implemented the API for experimental evaluation. The background in Chapter 2 adapts parts of our primer on transformer interpretability (Ferrando et al., 2024), for which I contributed by surveying the literature and writing content regarding transformer architecture, input attribution methods, steering approaches, and interpretability tools.

1.2.2 Open-source Contributions

Open-source software proved fundamental to this thesis, providing a solid foundation for conducting reproducible experimental work. Notably, all investigations we conducted employed solely open-source tools, models and datasets, despite the current popularity of proprietary language models. Each chapter provides links to all datasets, models, code, and demos to encourage scrutiny and foster further research.

My most notable contribution to the open-source research ecosystem is the Inseq toolkit, presented in Chapter 3, for which I serve as development lead. The library now counts 430+ Github stars and 80+ citations across international venues.

I also contributed to the development of the following open-source projects:

The Groningen Translation Environment (GroTE), a Gradio-based UI for machine translation post-editing supporting the live recording of behavioral logs using the Hugging Face

datasetshub andspacesecosystem, developed with the help of Vilém Zouhar for the QE4PE study (Chapter 9). Available at https://github.com/gsarti/grote or viapip install grote.gradio-highlightedtextbox, a Svelte component for Gradio supporting text editing with highlighted spans, developed for collecting behavioral edit data in GroTE. Available at https://huggingface.co/spaces/gsarti/gradio_highlightedtextbox or viapip install gradio-highlightedtextbox.labl, a toolkit to facilitate token-level analyses of annotated texts with multiple edits and tokenization schemes, developed with the help of Vilém Zouhar for Chapter 10 analyses. Available at https://github.com/gsarti/labl or viapip install labl.Interpreto, a Python toolbox for concept-based interpretability analyses of language models maintained by the FOR/DEEL teams, which I helped design and develop as part of my visit to the IRT Saint Exupéry research institute in Toulouse, France. Interpreto is available at https://github.com/FOR-sight-ai/interpreto or via

pip install interpreto.

The full set of open-source contributions, including demos, models, and datasets, are available on GitHub and 🤗 Hugging Face.

1.2.3 Other Research Contributions

Beyond this dissertation’s scope, my research output included projects organized around two main themes:

Advancing Italian natural language processing:

Miaschi, A., Sarti, G., Brunato, D., Dell’Orletta, F. and Venturi, G. (2022). Probing Linguistic Knowledge in Italian Neural Language Models across Language Varieties. Italian Journal of Computational Linguistics (IJCoL)

Bianchi, F., Attanasio, G., Pisoni, R., Terragni, S., Sarti, G. and Balestri, D. (2023). Contrastive Language-Image Pre-training for the Italian Language. In Proc. of the 9th Italian Conference on Computational Linguistics (CLiC-it)

Sarti, G. and Nissim, M. (2024). IT5: Text-to-text Pretraining for Italian Language Understanding and Generation. In Proc. of the Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING)

Sarti, G., Caselli, T., Nissim, M. and Bisazza, A. (2024b). Non Verbis, Sed Rebus: Large Language Models Are Weak Solvers of Italian Rebuses. In Proc. of the 10th Italian Conference on Computational Linguistics (CLiC-it)

Sarti, G., Caselli, T., Bisazza, A. and Nissim, M. (2024a). EurekaRebus - Verbalized Rebus Solving with LLMs: A CALAMITA Challenge. In Proc. of the 10th Italian Conference on Computational Linguistics (CLiC-it)

Ciaccio, C., Sarti, G., Miaschi, A. and Dell’Orletta, F. (2025). Crossword Space: Latent Manifold Learning for Italian Crosswords and Beyond. In Proc. of the 11th Italian Conference on Computational Linguistics (CLiC-it)

Interpreting the inner workings of generative language models:

Langedijk, A., Mohebbi, H., Sarti, G., Zuidema, W. and Jumelet, J. (2024). DecoderLens: Layerwise Interpretation of Encoder-Decoder Transformers. In Findings of the North American Chapter of the Association for Computational Linguistics (NAACL Findings)

Edman, L., Sarti, G., Toral, A., van Noord, G. and Bisazza, A. (2024). Are Character-level Translations Worth the Wait? Comparing ByT5 and mT5 for Machine Translation. Trans. of the Association for Computational Linguistics (TACL)

Scalena, D., Sarti, G. and Nissim, M. (2024). Multi-property Steering of Large Language Models with Dynamic Activation Composition. In Proc. of the 7th Workshop on Analyzing and Interpreting Neural Networks for NLP (BlackboxNLP)

Ghasemi Madani, M. R., Gema, A. P., Sarti, G., Zhao, Y., Minervini, P. and Passerini, A. (2025). Noiser: Bounded Input Perturbations for Attributing Large Language Models. In Proc. of the 2nd Conference on Language Modeling (CoLM)

Candussio, S., Saveri, G., Sarti, G. and Bortolussi, L. (2025). Bridging Logic and Learning: Decoding Temporal Logic Embeddings via Transformers. In Proc. of the European Conference on Machine Learning and Principles of Knowledge Discovery in Databases (ECML-PKDD)

Islam, K. I. and Sarti, G. (2025). Reveal-Bangla: A Dataset for Cross-Lingual Multi-Step Reasoning Evaluation. In Proc. of the 2nd Workshop on Bangla Language Processing (BLP).

Fin also had the privilege of co-organizing the BlackboxNLP 2025 workshop2—the leading venue for NLP interpretability work—and contributing to its shared task on benchmarking mechanistic interpretability methods for circuit localization and causal variable identification in large language models:

Belinkov, Y., Mueller, A., Kim, N., Mohebbi, H., Chen, H., Arad, D., Sarti, G. (2025). Proceedings of the 8th BlackboxNLP Workshop: Analyzing and Interpreting Neural Networks for NLP.

Arad, D., Belinkov, Y., Chen, H., Kim, N., Mohebbi, H., Mueller, A., Sarti, G., Tutek, M. (2025). Findings of the BlackboxNLP 2025 Shared Task: Localizing Circuits and Causal Variables in Language Models. In Proc. of the 8th Workshop on Analyzing and Interpreting Neural Networks for NLP (BlackboxNLP)

Shared first co-authorship is indicated by \(^\dagger\).↩︎