Appendix B — Conditioning Generation for Personalized Machine Translation

B.1 Retrieval and Marking for Attribute-Controlled Translation

B.1.1 Prompt Templates

Formality-Controlled Translation: Here is a sentence: {src} Here is its lang translation written in a attr style: {tgt} The translated sentence conveys a attr style by using words such as ‘w1’, ‘w2’.

Gender-Controlled Translation: Here is a sentence: {src} Here is its lang translation in which the person is attr: {tgt} In the translation, the attr gender of the person is made explicit by words such as ‘w1’, ‘w2’.
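The formality template above can be filled programmatically. The following is a minimal sketch, assuming that `lang`, `attr`, and the marked words `w1`, `w2` are plain string substitutions; the function names and the example sentence are hypothetical and not taken from the original implementation.

```python
# Wording copied from the formality template; placeholder handling is an assumption.
FORMALITY_TEMPLATE = (
    "Here is a sentence: {src} "
    "Here is its {lang} translation written in a {attr} style: {tgt}"
)
# Optional marking sentence appended after the translation.
FORMALITY_MARKING = (
    " The translated sentence conveys a {attr} style by using words such as {words}."
)


def quote_words(words):
    """Render marked words as ‘w1’, ‘w2’, ... as in the template."""
    return ", ".join(f"\u2018{w}\u2019" for w in words)


def formality_prompt(src, lang, attr, tgt="", marked_words=None):
    """Fill the formality template; add the marking sentence only if words are given."""
    prompt = FORMALITY_TEMPLATE.format(src=src, lang=lang, attr=attr, tgt=tgt)
    if marked_words:
        prompt += FORMALITY_MARKING.format(attr=attr, words=quote_words(marked_words))
    return prompt


# Hypothetical in-context example (not from CoCoA-MT).
example = formality_prompt(
    "How are you?", "Spanish", "formal", "¿Cómo está usted?", ["usted"]
)
```

Leaving `tgt` empty yields the open-ended prompt for the sentence to be translated, while passing `marked_words` reproduces the marking sentence for in-context examples.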

B.1.2 Full Per-language Results

- Table B.1: Detailed scores of same-language prompting on CoCoA-MT (preliminary evaluation).1

- Table B.2: Full results of same-language prompting on CoCoA-MT (full evaluation).

- Table B.3: Full results of same-language prompting on MT-GenEval (full evaluation).

- Table B.4: Full results of cross-lingual prompting on CoCoA-MT.

- Table B.5: Full results of cross-lingual prompting on MT-GenEval.

| Model | Setup | BLEU | | | | | COMET | | | | | Sentential Accuracy | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | | 0 | 4 | 8 | 16 | 32 | 0 | 4 | 8 | 16 | 32 | 0 | 4 | 8 | 16 | 32 |
| BLOOM 7.1B | base | 22 | 29 | 30 | 31 | 21 | .16 | .58 | .59 | .60 | -.09 | .56 | .76 | .84 | .88 | .73 |
| | Ramp | | 31 | 32 | 33 | 25 | | .60 | .61 | .62 | .15 | | .84 | .89 | .91 | .84 |
| XGLM 7.5B | base | 12 | 25 | 27 | 28 | 29 | -.53 | .44 | .45 | .50 | .52 | .52 | .76 | .84 | .85 | .89 |
| | Ramp | | 27 | 28 | 28 | 30 | | .45 | .48 | .47 | .48 | | .86 | .90 | .91 | .92 |
| GPT-NeoX 20B | base | 23 | 28 | 29 | 29 | 29 | .11 | .27 | .27 | .27 | .28 | .56 | .80 | .85 | .85 | .95 |
| | Ramp | | 29 | 30 | 30 | 29 | | .28 | .31 | .31 | .28 | | .85 | .89 | .89 | .87 |
| BLOOM 175B | base | 30 | 38 | 39 | 39 | -- | .48 | .73 | .74 | .75 | -- | .61 | .90 | .95 | .95 | -- |
| | Ramp | | 39 | 40 | 40 | -- | | .74 | .74 | .76 | -- | | .95 | .97 | .97 | -- |

| Model | Setup | Metric | ES | | FR | | HI | | PT | | AVG |
|---|---|---|---|---|---|---|---|---|---|---|---|
| | | | F | I | F | I | F | I | F | I | |
| XGLM 7.5B | base | BLEU | 30.1 | 33.0 | 30.7 | 28.8 | 18.5 | 16.9 | 35.7 | 35.4 | 28.6 |
| | | COMET | 0.500 | 0.527 | 0.348 | 0.350 | 0.454 | 0.425 | 0.547 | 0.554 | 0.463 |
| | | L-Acc | 0.524 | 0.966 | 0.977 | 0.633 | 0.976 | 0.744 | 0.931 | 0.928 | 0.835 |
| | | S-Acc | 0.507 | 0.958 | 0.953 | 0.840 | 0.963 | 0.748 | 0.888 | 0.912 | 0.846 |
| | +mark | BLEU | 31.0 | 33.2 | 29.4 | 27.4 | 19.2 | 18.6 | 35.7 | 35.5 | 28.7 |
| | | COMET | 0.498 | 0.541 | 0.207 | 0.188 | 0.439 | 0.409 | 0.552 | 0.552 | 0.423 |
| | | L-Acc | 0.728 | 0.972 | 0.985 | 0.923 | 0.986 | 0.860 | 0.960 | 0.947 | 0.920 |
| | | S-Acc | 0.697 | 0.958 | 0.963 | 0.917 | 0.983 | 0.838 | 0.927 | 0.937 | 0.902 |
| | Ramp | BLEU | 32.8 | 33.5 | 32.7 | 31.0 | 21.0 | 20.3 | 34.2 | 34.4 | 30.0 |
| | | COMET | 0.480 | 0.511 | 0.314 | 0.302 | 0.502 | 0.491 | 0.488 | 0.522 | 0.451 |
| | | L-Acc | 0.842 | 0.963 | 0.989 | 0.926 | 0.993 | 0.885 | 0.961 | 0.943 | 0.938 |
| | | S-Acc | 0.803 | 0.952 | 0.975 | 0.922 | 0.980 | 0.873 | 0.928 | 0.948 | 0.923 |
| BLOOM 175B | base | BLEU | 44.3 | 45.0 | 42.9 | 41.0 | 27.1 | 25.8 | 47.3 | 45.7 | 39.9 |
| | | COMET | 0.728 | 0.759 | 0.611 | 0.600 | 0.673 | 0.645 | 0.762 | 0.750 | 0.691 |
| | | L-Acc | 0.795 | 0.960 | 0.987 | 0.890 | 0.978 | 0.885 | 0.987 | 0.954 | 0.930 |
| | | S-Acc | 0.889 | 0.963 | 0.987 | 0.888 | 0.980 | 0.863 | 0.987 | 0.960 | 0.940 |
| | +mark | BLEU | 45.8 | 44.5 | 43.3 | 41.8 | 28.4 | 27.1 | 46.4 | 45.3 | 40.3 |
| | | COMET | 0.726 | 0.745 | 0.610 | 0.594 | 0.677 | 0.659 | 0.751 | 0.745 | 0.688 |
| | | L-Acc | 0.930 | 0.987 | 0.996 | 0.958 | 0.995 | 0.936 | 0.989 | 0.972 | 0.970 |
| | | S-Acc | 0.942 | 0.985 | 0.992 | 0.957 | 0.992 | 0.925 | 0.990 | 0.977 | 0.970 |
| | Ramp | BLEU | 46.4 | 46.2 | 43.9 | 42.9 | 30.8 | 29.2 | 48.8 | 47.4 | 41.9 |
| | | COMET | 0.718 | 0.759 | 0.611 | 0.610 | 0.721 | 0.713 | 0.782 | 0.771 | 0.711 |
| | | L-Acc | 0.956 | 0.984 | 0.998 | 0.952 | 0.991 | 0.947 | 0.993 | 0.962 | 0.973 |
| | | S-Acc | 0.957 | 0.982 | 0.995 | 0.945 | 0.993 | 0.935 | 0.990 | 0.967 | 0.970 |
| Adapted MT | | BLEU | 44.4 | 43.7 | 43.4 | 37.8 | 19.1 | 17.0 | 53.0 | 49.9 | 38.5 |
| | | COMET | 0.712 | 0.724 | 0.559 | 0.547 | -0.191 | -0.263 | 0.783 | 0.764 | 0.454 |
| | | L-Acc | 0.697 | 0.598 | 0.822 | 0.377 | 0.869 | 0.449 | 0.972 | 0.744 | 0.691 |
| | | S-Acc | 0.700 | 0.600 | 0.810 | 0.400 | 0.680 | 0.600 | 0.950 | 0.800 | 0.693 |
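For the rows checked here, the AVG column appears to be the unweighted mean of the per-language, per-attribute scores. The short sketch below recomputes it for the XGLM 7.5B base BLEU and COMET rows of the preceding table; the rounding precision and the helper name `macro_average` are assumptions made for illustration.

```python
from statistics import mean

# Scores copied from the XGLM 7.5B "base" rows, in column order:
# ES-F, ES-I, FR-F, FR-I, HI-F, HI-I, PT-F, PT-I.
bleu = [30.1, 33.0, 30.7, 28.8, 18.5, 16.9, 35.7, 35.4]
comet = [0.500, 0.527, 0.348, 0.350, 0.454, 0.425, 0.547, 0.554]


def macro_average(scores, ndigits):
    """Unweighted mean over all language/attribute columns, rounded for display."""
    return round(mean(scores), ndigits)


avg_bleu = macro_average(bleu, 1)    # compare with the AVG cell of the BLEU row
avg_comet = macro_average(comet, 3)  # compare with the AVG cell of the COMET row
```

Because every language and attribute contributes equally, a single weak column (for example HI) lowers the average as much as any other column would.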

| Model | Setup | Metric | AR | | ES | | FR | | HI | | PT | | AVG |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | | | F | M | F | M | F | M | F | M | F | M | |
| XGLM 7.5B | base | BLEU | 7.6 | 7.5 | 35.5 | 38.2 | 27.1 | 28.6 | 13.8 | 16.4 | 29.2 | 33.1 | 23.7 |
| | | COMET | -.040 | -.012 | .694 | .738 | .509 | .555 | .304 | .332 | .661 | .713 | .445 |
| | | L-Acc | .848 | .947 | .688 | .808 | .715 | .880 | .585 | .956 | .621 | .855 | .790 |
| | | S-Acc | .617 | .866 | .651 | .938 | .581 | .920 | .303 | .962 | .494 | .934 | .727 |
| | +mark | BLEU | 7.7 | 7.8 | 35.4 | 38.2 | 27.5 | 28.7 | 14.0 | 16.7 | 29.1 | 32.4 | 23.7 |
| | | COMET | -.038 | -.020 | .704 | .735 | .508 | .556 | .300 | .317 | .663 | .714 | .444 |
| | | L-Acc | .868 | .939 | .665 | .811 | .701 | .881 | .581 | .955 | .626 | .860 | .789 |
| | | S-Acc | .664 | .856 | .612 | .937 | .562 | .919 | .355 | .966 | .519 | .927 | .732 |
| | Ramp | BLEU | 9.2 | 8.8 | 37.5 | 39.4 | 27.5 | 29.2 | 14.8 | 16.6 | 31.4 | 33.3 | 24.8 |
| | | COMET | .037 | .043 | .723 | .759 | .528 | .571 | .325 | .337 | .681 | .723 | .473 |
| | | L-Acc | .939 | .961 | .750 | .806 | .781 | .885 | .667 | .956 | .759 | .854 | .836 |
| | | S-Acc | .836 | .901 | .722 | .936 | .716 | .937 | .509 | .974 | .729 | .940 | .820 |
| BLOOM 175B | base | BLEU | 14.8 | 16.9 | 45.6 | 50.3 | 38.1 | 41.7 | 20.8 | 24.6 | 37.6 | 42.2 | 33.3 |
| | | COMET | .282 | .395 | .837 | .892 | .719 | .770 | .599 | .629 | .807 | .861 | .679 |
| | | L-Acc | .665 | .966 | .578 | .814 | .660 | .902 | .480 | .951 | .594 | .872 | .748 |
| | | S-Acc | .411 | .934 | .515 | .965 | .581 | .961 | .212 | .973 | .525 | .960 | .704 |
| | +mark | BLEU | 15.2 | 17.1 | 45.8 | 50.0 | 37.9 | 41.3 | 20.3 | 23.8 | 37.6 | 42.2 | 33.1 |
| | | COMET | .294 | .387 | .843 | .887 | .712 | .767 | .576 | .606 | .807 | .861 | .674 |
| | | L-Acc | .707 | .969 | .610 | .818 | .663 | .902 | .493 | .958 | .594 | .872 | .759 |
| | | S-Acc | .482 | .936 | .568 | .973 | .588 | .962 | .284 | .974 | .525 | .960 | .725 |
| | Ramp | BLEU | 16.7 | 17.6 | 47.9 | 50.2 | 39.5 | 41.8 | 22.2 | 25.0 | 39.3 | 42.7 | 34.3 |
| | | COMET | .358 | .407 | .860 | .895 | .734 | .787 | .632 | .646 | .810 | .858 | .699 |
| | | L-Acc | .841 | .972 | .709 | .809 | .765 | .906 | .633 | .953 | .701 | .886 | .817 |
| | | S-Acc | .721 | .940 | .707 | .964 | .732 | .971 | .518 | .973 | .683 | .972 | .818 |
| Adapted MT | | BLEU | 23.3 | 24.4 | 53.2 | 54.2 | 44.2 | 46.4 | 29.3 | 32.3 | 43.4 | 45.7 | 35.9 |
| | | COMET | .496 | .522 | .876 | .902 | .759 | .797 | .722 | .743 | .825 | .857 | .528 |
| | | L-Acc | .910 | .981 | .932 | .921 | .919 | .956 | .762 | .837 | .922 | .961 | .853 |
| | | S-Acc | .940 | .970 | .910 | .960 | .950 | .960 | .280 | .750 | .930 | .990 | .863 |

| Model | Setup | Metric | ES | | FR | | HI | | PT | | AVG |
|---|---|---|---|---|---|---|---|---|---|---|---|
| | | | F | I | F | I | F | I | F | I | |
| BLOOM 175B | base | BLEU | 40.9 | 46.3 | 33.7 | 32.0 | 21.8 | 18.9 | 33.9 | 29.0 | 32.1 |
| | | COMET | 0.785 | 0.823 | 0.611 | 0.615 | 0.409 | 0.436 | 0.772 | 0.705 | 0.644 |
| | | L-Acc | 0.211 | 0.990 | 0.899 | 0.656 | 0.944 | 0.123 | 0.704 | 0.010 | 0.567 |
| | | S-Acc | 0.200 | 0.930 | 0.880 | 0.715 | 0.940 | 0.100 | 0.975 | 0.025 | 0.596 |
| | Ramp | BLEU | 39.4 | 44.6 | 35.3 | 34.7 | 22.4 | 18.4 | 32.2 | 27.5 | 31.8 |
| | | COMET | 0.749 | 0.788 | 0.575 | 0.614 | 0.488 | 0.480 | 0.770 | 0.702 | 0.646 |
| | | L-Acc | 0.169 | 0.978 | 0.949 | 0.770 | 0.973 | 0.143 | 1.000 | 0.015 | 0.625 |
| | | S-Acc | 0.175 | 0.950 | 0.930 | 0.790 | 0.975 | 0.140 | 0.975 | 0.040 | 0.622 |

| Model | Setup | Metric | AR | | ES | | FR | | HI | | PT | | AVG |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | | | F | M | F | M | F | M | F | M | F | M | |
| BLOOM 175B | base | BLEU | 10.6 | 11.6 | 43.3 | 47.4 | 34.2 | 38.2 | 11.4 | 15.0 | 34.4 | 38.6 | 28.5 |
| | | COMET | .071 | .138 | .805 | .857 | .648 | .719 | -.135 | -.003 | .766 | .822 | .469 |
| | | L-Acc | .843 | .956 | .627 | .810 | .561 | .899 | .653 | .962 | .588 | .874 | .777 |
| | | S-Acc | .541 | .785 | .529 | .936 | .389 | .944 | .051 | .745 | .475 | .939 | .633 |
| | Ramp | BLEU | 10.0 | 10.5 | 44.6 | 47.8 | 35.7 | 39.1 | 13.9 | 16.6 | 36.0 | 39.4 | 29.4 |
| | | COMET | -.044 | .020 | .818 | .860 | .686 | .739 | .139 | .212 | .779 | .816 | .502 |
| | | L-Acc | .845 | .956 | .660 | .815 | .608 | .900 | .574 | .961 | .680 | .882 | .788 |
| | | S-Acc | .479 | .703 | .605 | .953 | .497 | .956 | .105 | .870 | .613 | .951 | .673 |

B.1.3 Error Analysis of Cross-Lingual Prompting

Table B.6 shows two examples where Ramp performs significantly worse than the base model in terms of COMET. In the first example, having multiple in-context examples containing “million” led the model to mistranslate “billion” as “million”. In the second example, the color-related in-context examples led the model to hallucinate output about clothing colors.

Repeated misleading in-context examples are observed less often on MT-GenEval and in the same-language setting because (1) CoCoA-MT translates the same set of English sentences into different languages, whereas MT-GenEval collects English sentences independently; and (2) there are no duplicated source (English) sentences within each language. (Therefore, if Ramp retrieves duplicated English sentences as in Table B.6, their reference translations are guaranteed to be in different languages.)

| Field | Text |
|---|---|
| In-context examples (EN) | Maybe he should. What did you think about that guy findin 3 million dollars worth of old baseball cards in his grandpas attic. |
| | Yeah that makes sense, did you heard about the $10 million bunker he has? |
| | I have. I heard that he started a library in 1895 with 32,000 books in it. All from his personal collection. Can you imagine? |
| | Yeah that makes sense, did you heard about the $10 million bunker he has? |
| | Yeah that makes sense, did you heard about the $10 million bunker he has? |
| | Maybe he should. What did you think about that guy findin 3 million dollars worth of old baseball cards in his grandpas attic. |
| | That is really expensive I agree, did you watch the Lego Batman movie? |
| | Yeah that makes sense, did you heard about the $10 million bunker he has? |
| | That is crazy. Do you like Tom Hanks, he’s grossed over 8.5 billion at the box office |
| | That is really expensive I agree, did you watch the Lego Batman movie? |
| | That is crazy. Do you like Tom Hanks, he’s grossed over 8.5 billion at the box office |
| | That is crazy. Do you like Tom Hanks, he’s grossed over 8.5 billion at the box office |
| | He doesnt look like he has 56 years! I heard he made 75000000 from Mission Impossible 3 |
| | Really? I guess he made a valuable contribution to science and also to medicine, did you hear of that species of flying snakes |
| Input (EN) | If you got it why not? He is worth over 20 billion dollars after all. |
| Reference (ES) | Si lo tiene, ¿por qué no? Al fin y al cabo, vale más de 20 000 millones de dólares. |
| Ramp (ES) | Si lo tienes, ¿por qué no? Es millonario después de todo. |
| base (ES) | Si lo tienes, ¿por qué no? Él vale más de 20 mil millones de dólares después de todo. |
| In-context examples (EN) | thats such a fun thing to have in your code of conduct. are you more of a dog person than cat person? |
| | For sure lol, it was so nice talking with you, say hi to your cats for me! |
| | thats such a fun thing to have in your code of conduct. are you more of a dog person than cat person? |
| | What can’t dogs do! I know they aren’t color blind like we were taught when young. It was so nice chatting with you, tell yuki hi! |
| | For sure lol, it was so nice talking with you, say hi to your cats for me! |
| | Oh yeah. That sucker was mean let me tell you. I think I would have a rabid dog chase me, which by the way dogs are not color blind! |
| | Thanks you too! And watch out for dogs, they’re not actually colorblind but they just don’t see as many colors as a normal human can |
| | LOL That’s funny! Google prefers dogs over cats! The wrote in their code of conduct that they are a dog company. It’s been fun chatting with you! |
| | Such gorgeous dogs! Do you & your dogs live in a northern climate? |
| | LOL That’s funny! Google prefers dogs over cats! The wrote in their code of conduct that they are a dog company. It’s been fun chatting with you! |
| | thats such a fun thing to have in your code of conduct. are you more of a dog person than cat person? |
| | Such gorgeous dogs! Do you & your dogs live in a northern climate? |
| | haha me too! I heard that they wore clothes that were full of color. Surprised me because I always thought of them as wearing black and white. |
| | For sure lol, it was so nice talking with you, say hi to your cats for me! |
| Input (EN) | lol I had an African grey that could say everything I said around the house. Like clean your room! as well any loud sounds. |
| Reference (PT) | lol Tinha um papagaio-cinzento que conseguia dizer tudo o que ouvia em casa. Tipo “Limpe o seu quarto!” e também quaisquer sons altos |
| Ramp (PT) | haha eu também! Eu ouvi dizer que eles usam roupas de todas as cores. Surpreendeu-me porque eu sempre pensei neles usando preto e branco. |
| base (PT) | hahaha eu tinha um papagaio cinza africano que dizia tudo o que eu dizia em casa. Como limpar o quarto! Bem como qualquer som alto. |
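The repetition visible in Table B.6 can be detected automatically before a prompt is sent to the model. The sketch below is a hypothetical diagnostic, not part of Ramp: it counts how often each English source sentence occurs among the retrieved in-context examples and optionally keeps only the first occurrence of each. The function names and the idea of de-duplicating by English source are assumptions.

```python
from collections import Counter


def repeated_sources(sources, min_count=2):
    """Return {sentence: count} for sentences retrieved at least min_count times."""
    counts = Counter(s.strip() for s in sources)
    return {s: c for s, c in counts.items() if c >= min_count}


def unique_in_order(sources):
    """Drop repeated sentences while keeping the first occurrence of each."""
    return list(dict.fromkeys(s.strip() for s in sources))


# A few retrieved English sources copied from the first example in Table B.6.
retrieved = [
    "That is really expensive I agree, did you watch the Lego Batman movie?",
    "Yeah that makes sense, did you heard about the $10 million bunker he has?",
    "That is really expensive I agree, did you watch the Lego Batman movie?",
    "Yeah that makes sense, did you heard about the $10 million bunker he has?",
    "Yeah that makes sense, did you heard about the $10 million bunker he has?",
]
duplicates = repeated_sources(retrieved)
deduplicated = unique_in_order(retrieved)
```

Note that in the cross-lingual setting duplicated English sources carry references in different languages, so de-duplicating by source text also reduces the language diversity of the prompt; whether that trade-off is desirable is left open here.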

B.2 Steering Language Models for Machine Translation Personalization

B.2.1 Experiments Reproducibility

This section lists the parameters needed to reproduce our experimental setups.

B.2.1.1 Base Prompt

We use the same prompt template across all methods: ZS (which corresponds to the original model translation), ZS-Exp.\(_\text{HT}\), ZS-Exp.\(_\text{PT}\) (detailed in Section B.2.1.4), MS, ActAdd, ReFT, and SAE-based contrastive setups. This prompt, shown in Listing B.3, instructs the model to translate the source sentence and explicitly tells it not to add any explanation of the translation process. Because all tested models are instruction-tuned, we use their native chat templates to preprocess the input. For multi-shot examples, the user and assistant turns are repeated for each example, always using the same prompt structure.
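The turn structure described above can be sketched as follows. This is a minimal illustration, assuming the common role/content message format accepted by chat templates and assuming that the source sentence is wrapped in angle brackets; the prompt wording follows Listing B.3, while the function names and the example sentences are hypothetical.

```python
def build_user_prompt(source, guidelines=None):
    """Assemble the user turn; the guideline line is added only for ZS-Exp setups."""
    parts = [
        "Translate the following sentence between the angular parentheses into English."
    ]
    if guidelines:
        parts.append(f"Follow the following guidelines when translating: {guidelines}")
    # Wrapping the sentence in <...> is an assumption about the placeholder format.
    parts.append(f"The original sentence is: <{source}>.")
    parts.append(
        "Remember to write only the translation, without any additional text or explanation."
    )
    return "\n".join(parts)


def build_messages(source, shots=(), guidelines=None):
    """Repeat user/assistant turns for each shot, then add the final user turn."""
    messages = []
    for shot_source, shot_translation in shots:
        messages.append({"role": "user", "content": build_user_prompt(shot_source, guidelines)})
        messages.append({"role": "assistant", "content": shot_translation})
    messages.append({"role": "user", "content": build_user_prompt(source, guidelines)})
    return messages


# One-shot example with hypothetical sentences.
messages = build_messages("Ciao.", shots=[("Buongiorno.", "Good morning.")])
```

The resulting list can then be passed to the model's own chat template, so that the same message structure is rendered with each model's native special tokens.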

B.2.1.2 ReFT Training

ReFT training was conducted using the PyReFT toolkit from the original authors2. We applied the intervention at the same hook point used by the other steering methods, namely the layer output corresponding to the residual stream at the selected layer. The training configuration uses a low_rank_dimension of 4, a lora_alpha of 32, and a lora_dropout of 0.05. ReFT was trained for 100 epochs on the same 20 prompts used in the MS setup.
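To make the role of low_rank_dimension concrete, the sketch below applies a low-rank intervention of the LoReFT form \(h + R^\top(Wh + b - Rh)\) to a single hidden vector. It assumes that the LoReFT parameterization from the ReFT paper is the intervention type used, which the text above does not state; it uses plain Python lists instead of PyReFT, and the toy dimensions and values are hypothetical.

```python
def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def loreft(h, R, W, b):
    """Apply h + R^T (W h + b - R h) to one hidden vector h.

    R and W are lists of r rows of length len(h); b has length r.
    r plays the role of low_rank_dimension (4 in the configuration above).
    """
    target = [dot(w_row, h) + b_k for w_row, b_k in zip(W, b)]  # W h + b
    current = [dot(r_row, h) for r_row in R]                     # R h
    delta = [t - c for t, c in zip(target, current)]             # edit in the subspace
    return [
        h_i + sum(R[k][i] * delta[k] for k in range(len(R)))
        for i, h_i in enumerate(h)
    ]


# Toy setting: hidden size 6, rank 4, R spans the first four coordinates.
R = [[1 if i == k else 0 for i in range(6)] for k in range(4)]
W = [[0] * 6 for _ in range(4)]
b = [1, 1, 1, 1]
h = [0.5, -1, 2, 3, 4, 5]
steered = loreft(h, R, W, b)
```

In this toy case the four coordinates spanned by R are overwritten with the learned target, while the remaining coordinates of the residual stream are left unchanged, which is what keeps the edit low-rank.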

B.2.1.3 SAE Cont.\(_\text{HT}\) and SAE Cont.\(_\text{PT}\)

We use the NNsight library (Fiotto-Kaufman et al., 2025) to extract and manipulate model activations for all steering experiments. The source code is publicly available in the repository linked in the main body of this paper. For consistency, we use the same set of contrastive examples employed in the MS approach.

B.2.1.4 ZS-Exp.\(_\text{HT}\) and ZS-Exp.\(_\text{PT}\)

For both the ZS-Exp.\(_\text{HT}\) and ZS-Exp.\(_\text{PT}\) setups, we used GPT-4o (June 2025) to generate explanations detailing the stylistic differences between a base translation and a target human translation. The prompt template for this task is shown in Listing B.1; it is filled with the same 20 examples used in the MS, SAE Cont.\(_\text{HT}\), and SAE Cont.\(_\text{PT}\) setups.

All outputs were manually inspected to ensure no verbatim excerpts from the provided examples were present, avoiding any risk of data leakage. Example outputs for different novels are shown in Listing B.2.
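The manual inspection can be complemented by an automatic overlap check. The following is a hypothetical sketch, not the procedure used in the experiments: it flags word n-grams that a generated explanation shares with any of the provided examples. The n-gram length of 5, the whitespace tokenization, and the function names are assumptions.

```python
def word_ngrams(text, n):
    """Set of lowercased word n-grams, using whitespace tokenization."""
    tokens = text.lower().split()
    return {" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def leaked_ngrams(explanation, examples, n=5):
    """Return n-grams of the explanation that also occur in any example."""
    explanation_ngrams = word_ngrams(explanation, n)
    leaked = set()
    for example in examples:
        leaked |= explanation_ngrams & word_ngrams(example, n)
    return leaked


examples = ["The stable was then shut and Pinocchio was left alone."]
clean = leaked_ngrams("Keep the tone warm, welcoming, and direct.", examples)
flagged = leaked_ngrams("Write: the stable was then shut and so on.", examples)
```

An empty result does not rule out paraphrased leakage, so a check of this kind narrows down what needs to be read rather than replacing the manual pass.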

Finally, these generated guidelines are used to prompt the evaluated models, following the template shown in Listing B.3.

Listing B.1: Prompt template used to generate the stylistic explanations.

Objective - Identify stylistic choices in translations for personalization purposes.

You will be provided with a source text, a standard translation, and a target translation by a specific translator whose style we want to emulate.

Your task is to analyze the 'Target translation' by comparing it to the 'Base translation' and the 'Source text'.

Identify and list the distinctive stylistic patterns, choices, and preferences exhibited in the Target translation.

These stylistic cues should help another translator (or an AI) to adapt their translations to match the style of the target translator.

Source text: ____

Base translation: ____

Target translation: ____

[... Repeat Source, Base and Target]

Please extract a concise list of key stylistic cues. Focus on aspects such as vocabulary choices, sentence structure, tone and register, handling of cultural nuances, punctuation/formatting preferences and overall creativity.

Output a short list of stylistic cues as bullet points. Write the list as if you were directly giving the guidelines to the translator and avoid using specific examples.

Listing B.2: Example outputs for different novels.

[When comparing H1 and MT for Beware of Pity (German)]

- Maintain a tone that is professional, thoughtful, and subtly persuasive.

- Avoid overly technical jargon unless necessary; explain specialized terms briefly if used.

- Preserve the author's voice, keeping a balance between academic rigor and narrative engagement.

- Ensure smooth transitions between sentences and paragraphs to support coherent argumentation.

- Translate idiomatic expressions in a way that retains their intended effect, even if the wording differs.

[When comparing H1 and H2 for Beware of Pity (German)]

- Keep the tone warm, welcoming, and direct - avoid overly formal or distant language.

- Use active voice wherever possible to maintain energy and engagement.

- Avoid idioms or expressions that may not translate culturally; aim for universal accessibility.

- Maintain consistent tone and register throughout, adapting to the intended audience's familiarity with the subject.

- Respect the rhythm and structure of the original, but feel free to adjust sentence length for readability.

[When comparing H1 and H2 for Crime and Punishment (Russian)]

- Prefer dynamic over formal vocabulary: Opt for vivid or emotionally charged words when available.

- Add tonal nuance and emotional shading: Enrich dialogues and narration with subtle shifts in tone, especially sarcasm, understatement, or irony, to match character voice or mood.

- Use contractions and familiar phrasing: Employ contractions and relaxed expressions to preserve spoken character.

- Expand or rephrase for clarity and voice: Don't hesitate to slightly reword or elaborate if it strengthens tone, clarifies intent, or enhances character differentiation.

- Favor rhythmic, flowing sentence structure: Break long, formal sentences into multiple shorter clauses or use punctuation (dashes, ellipses) for dramatic or emotional effect.

[...]

Listing B.3: Prompt template used for translation.

Translate the following sentence between the angular parentheses into English.

[if setup == ZS-Exp]

[Follow the following guidelines when translating: <explanations here>]

The original sentence is: ____.

Remember to write only the translation, without any additional text or explanation.

B.2.2 All Models Results

B.2.2.1 Full Prompting and Steering Results

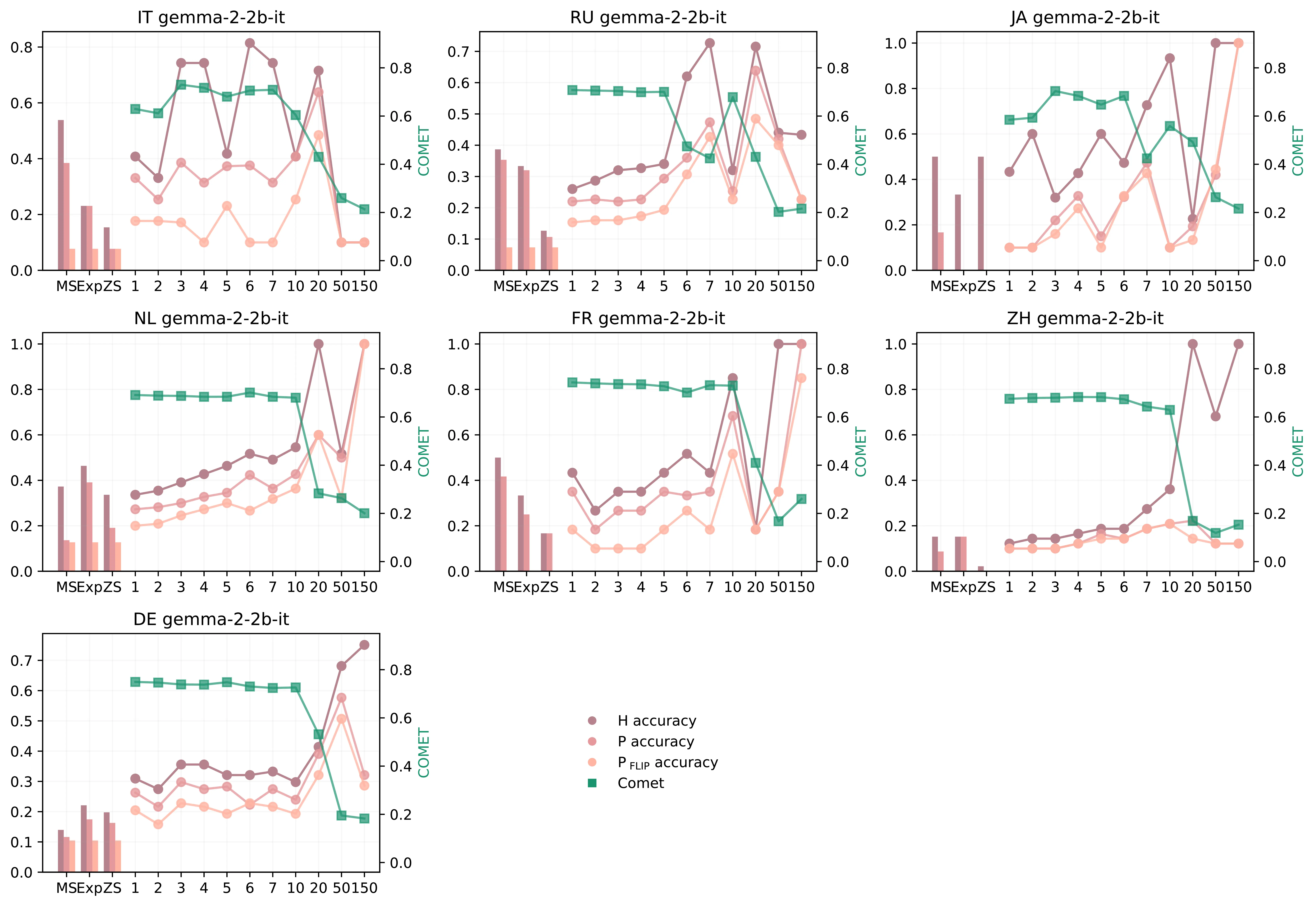

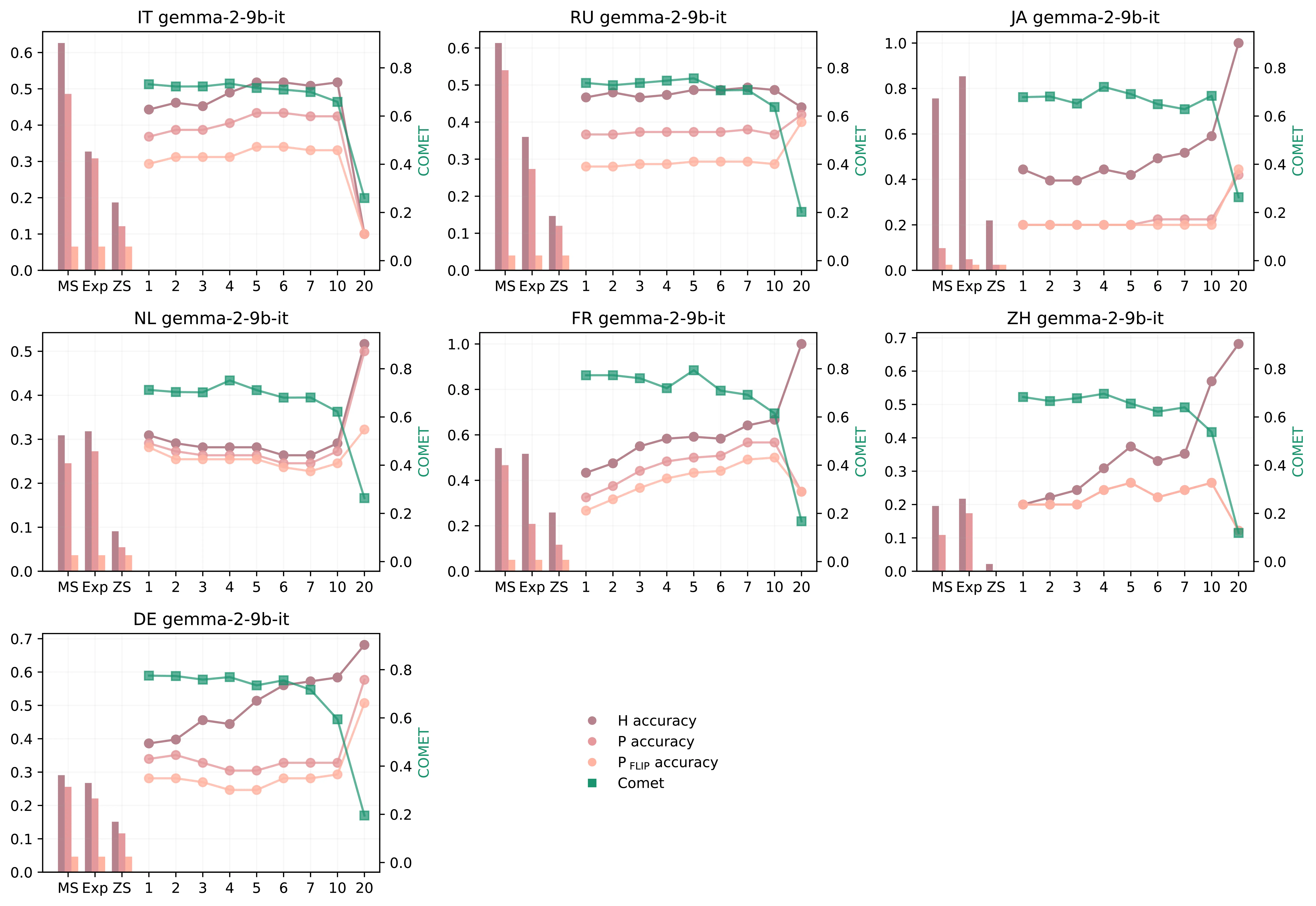

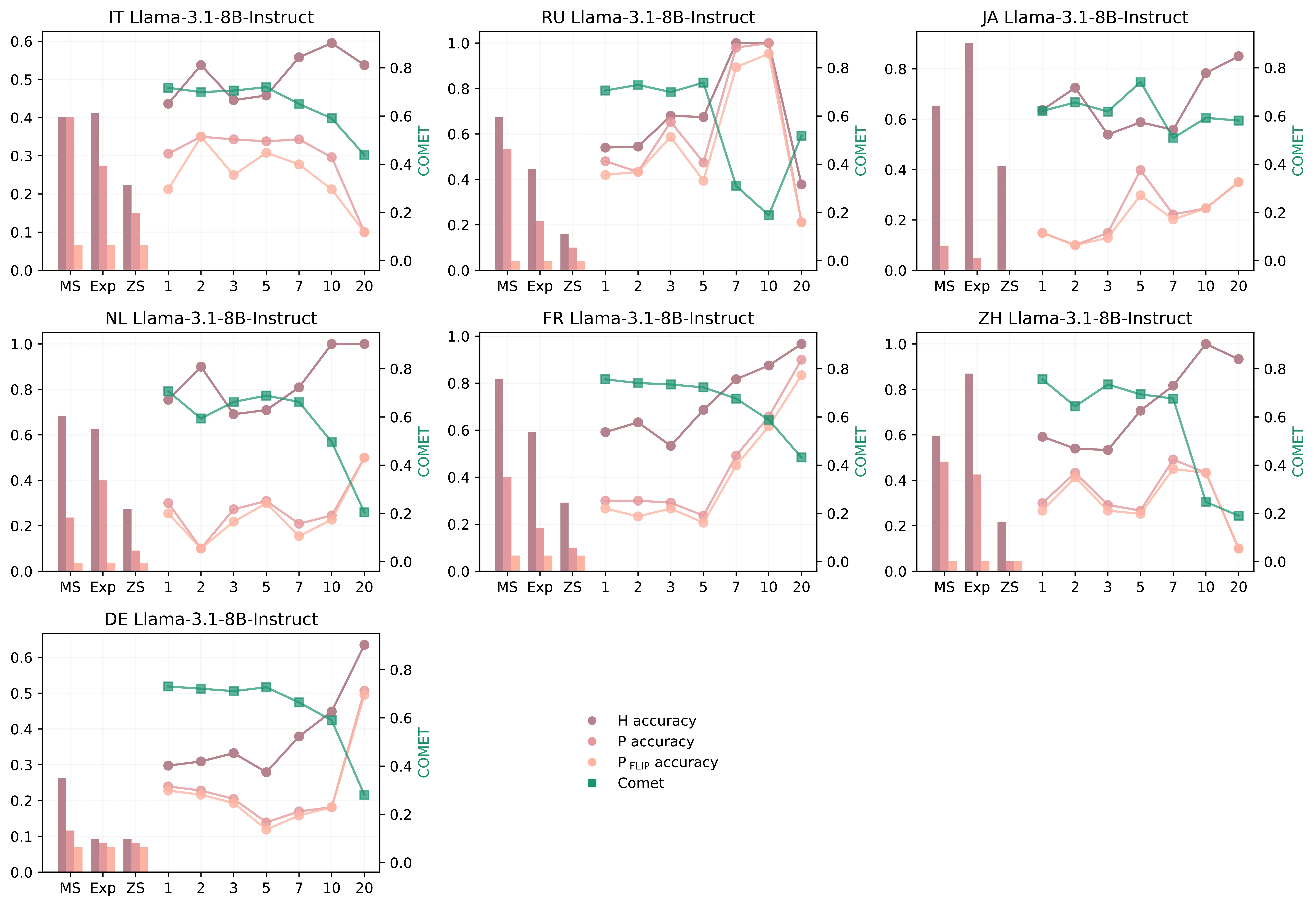

We present detailed plots of the results for each novel across the three evaluated models in Figure B.1 (Gemma 2 2B), Figure B.2 (Gemma 2 9B), and Figure B.3 (Llama 3.1 8B). These plots show the performance of all evaluated methods on three submetrics: H accuracy (general human-likeness), P accuracy (translator-specific accuracy), and P\(_\text{flip}\) (personalized flip accuracy), alongside the corresponding COMET scores measuring translation quality.

B.2.3 Dataset and Generation Examples

Table B.7 and Table B.8 present a selection of examples from two different languages, showcasing outputs from each of the tested setups. For each example, we also report the label predicted by the classifier and the associated COMET score.

| Source/Method | Translation | 📊 Classifier | ☄️ COMET |

|---|---|---|---|

| Source (ZH) | 遂又往後看時,只見畫著一張弓,弓上掛著香櫞。也有一首歌詞云:「二十年來辨是非,榴花開處照宮闈。三春爭及初春景,虎兕相逢大夢歸。」 | ||

| H\(_1\) | Forthwith, therefore, he prosecuted a further perusal of what came next, when he caught sight of a picture of a bow. On this bow hung a citron. There was also this ode: Full twenty years right and wrong to expound will be thy fate! What place pomegranate blossoms come in bloom will face the Palace Gate! The third portion of spring, of the first spring in beauty short will fall! When tiger meets with hare thou wilt return to sleep perennial. | H\(_1\) | - |

| H\(_2\) | The picture that followed was of a bow with a citron hanging from it, followed by what looked like the words of a song: You shall, when twenty years in life's hard school are done, In pomegranate-time to palace halls ascend. Though three springs never could with your first spring compare, When hare meets tiger your great dream shall end. | H\(_2\) | - |

| Gemma 2 2B | When he turned back to look, he saw a bow hanging on it, with a plum blossom. There was also a poem that said: "For twenty years, I have been trying to distinguish right from wrong, and the plum blossoms bloom in the palace. In the spring, the tigers and the rhinoceroses meet, and they dream of returning home." | MT | 0.49 |

| Gemma 2 9B | Looking back, he saw a painting of a bow with a quiver hanging on it. There was also a line of lyrics that said: "For twenty years, I have distinguished right from wrong, and the blossoming of the pomegranate flowers illuminates the imperial court. Three springs have passed, and now it is the beginning of spring again, like a dream where tigers and rhinoceroses meet. | MT | 0.56 |

| Llama 3.1 8B | As I looked back, I saw a painting of a bow with a censer on it. There was also a poem that said: "Twenty years of distinguishing right from wrong, the lotus flower blooms in the palace. Three springs competing with the scenery of the first spring, the tiger and the rhinoceros meet and return to their dream." | MT | 0.53 |

| ZS-Exp.\(_\text{HT}\) | And as he turned his gaze back upon the scene once more, there was displayed an archer's bow strung tautly; upon its surface hung a fragrant plum blossom. A poem also recited thus spake: "For twenty years I have sought truth amidst strife, where blooming durian flowers illuminate the palace walls. In spring's embrace, they vie for beauty, tigers clashing in dreams of return." | MT | 0.60 |

| ZS-Exp.\(_\text{PT}\) | And as he turned his gaze back upon the scene once more, there was displayed an archer's bow strung tautly; upon its surface hung a fragrant plum blossom. A poem also recited thus: "For twenty years I have sought truth amidst strife, where blooming durian flowers illuminate the palace walls. In spring's embrace, they vie for beauty, tigers clashing in dreams of return." | MT | 0.61 |

| MS | Then, turning around, he noticed a picture depicting a bow hanging on its string, adorned with incense sticks. A verse read: "For twenty years I've tried to discern truth from falsehood, and wherever fragrant plum blossoms bloom, the palace gates shine brightly." | H\(_1\) | 0.65 |

| ActAdd | When he turned back to look, he saw a bow hanging on it, with a plum blossom. There was also a poem that said: "For twenty years, I have been trying to tell right from wrong, and the plum blossoms bloom in the palace. In spring, the tigers and rhinoceroses meet, and they dream of going back home." | MT | 0.53 |

| ReFT | When he turned back to look, he saw a bow hanging there, adorned with a plum blossom. There was also a poem that said: "For twenty years, I have tried to distinguish right from wrong, and plum blossoms bloom within the palace. In spring, tiger and rhinoceros meet, dreaming of their return home." | MT | 0.58 |

| SAE Cont.\(_\text{HT}\) (\(\alpha=1\)) | When he turned back to look, he saw a bow hung upon it, with a plum blossom. There was also a verse which read: "For twenty years, I have struggled to discern truth from falsehood, and the plums blossomed where the imperial court resided. During the three springs, the tiger and rhinoceros met, dreaming of their return." | MT | 0.62 |

| SAE Cont.\(_\text{HT}\) (\(\alpha=5\)) | He looked back and there hung a bow adorned with a plum blossom. A verse read: "Twenty years I've struggled for truth, where fragrant plums bloom near the imperial court. Spring arrives, tiger and rhino clash, dreaming of their return. | MT | 0.62 |

| SAE Cont.\(_\text{HT}\) (\(\alpha=50\)) | The when the veryShellTruth lookWarmTheTheTheTigeratPoint [...] | MT | 0.11 |

| SAE Cont.\(_\text{PT}\) (\(\alpha=5\)) | He looked back and saw a bow adorned with fragrant citron. A verse declared: "Twenty years I've weighed right and wrong; where pomegranate blossoms bloom, they light the palace gates. Spring's full bloom cannot match early spring's grace. When tiger meets rhino, one returns to the great dream." | H\(_1\) | 0.65 |

| Source/Method | Translation | 📊 Classifier | ☄️ COMET |

|---|---|---|---|

| Source (IT) | Intanto la stalla fu chiusa e Pinocchio rimase solo: e perché erano molte ore che non aveva mangiato, cominciò a sbadigliare dal grande appetito. E, sbadigliando, spalancava una bocca che pareva un forno. | ||

| H\(_1\) | When the stable door was closed, Pinocchio was left alone. And since he hadn't eaten in many hours, he began to yawn from hunger. And when he yawned, his mouth opened as wide as an oven. | H\(_1\) | - |

| H\(_2\) | The stable was then shut and Pinocchio was left alone. He had not eaten for many hours, and he began to yawn from hunger. And when he yawned he opened a mouth that seemed as wide as an oven. | H\(_2\) | - |

| Gemma 2 2B | At that moment the stable was closed and Pinocchio was left alone, and because it had been many hours since he had eaten, he began to yawn from a great hunger. And, yawning, he opened a mouth that seemed like a furnace. | MT | 0.79 |

| Gemma 2 9B | Meanwhile, the stable was closed and Pinocchio was left alone: and because it had been many hours since he had eaten, he began to yawn from his great hunger. And, yawning, he opened a mouth that looked like an oven. | MT | 0.80 |

| Llama 3.1 8B | Meanwhile the stable was closed and Pinocchio was left alone: and because it was many hours since he hadn't eaten, he began to yawn from great hunger. And, yawning, he opened a mouth that seemed like an oven. | MT | 0.80 |

| ZS-Exp.\(_\text{HT}\) | Meanwhile, the stable door was shut, leaving Pinocchio alone. His tummy rumbled because it had been hours since his last meal, and he started yawning widely from his hunger. With each yawn, his mouth opened like an oven. | MT | 0.78 |

| ZS-Exp.\(_\text{PT}\) | Meanwhile, the stable door was shut, leaving Pinocchio all alone. His tummy rumbled because it had been hours since his last meal, and he started yawning widely from sheer hunger. With each yawn, his mouth gaped open like an oven. | H\(_2\) | 0.80 |

| MS | Meanwhile, the stable door was shut and Pinocchio was left alone. Since it had been hours since he last ate, he began yawning from hunger. Each yawn opened his mouth wide enough to look like an oven. | H\(_2\) | 0.81 |

| ActAdd | Meanwhile, the stable was closed and Pinocchio remained alone. Because many hours passed without eating, he started yawning from very big hunger. And while yawning, he opened his mouth wide like an oven. | MT | 0.75 |

| ReFT | Meanwhile, the stable was closed and Pinocchio stayed alone. Because many hours had passed since he last ate, he began to yawn from big hunger. And, yawning, he opened a mouth that looked like an oven. | MT | 0.77 |

| SAE Cont.\(_\text{HT}\) (\(\alpha=1\)) | Meanwhile, the stable door was shut and Pinocchio was left alone. Because it had been many hours since he had eaten, he started to yawn with intense hunger. And, as he yawned, his mouth gaped open like an oven. | MT | 0.79 |

| SAE Cont.\(_\text{HT}\) (\(\alpha=5\)) | Meanwhile, the stable door shut, leaving Pinocchio all alone. Because it had been many hours since he'd eaten, he started to yawn with intense hunger. As he yawned, his mouth gaped open, looking like an oven. | H\(_2\) | 0.82 |

| SAE Cont.\(_\text{HT}\) (\(\alpha=50\)) | He He "It The ... You It "[They They) THE...I I HE IT [There There THEYRE WE "A A FOR "[...] | MT | 0.14 |

| SAE Cont.\(_\text{PT}\) (\(\alpha=5\)) | The stable was then shut, leaving Pinocchio all alone. He had not eaten for many hours and began to yawn from great hunger. As he yawned, he opened his mouth wide, like an oven. | H\(_2\) | 0.84 |