Abstract

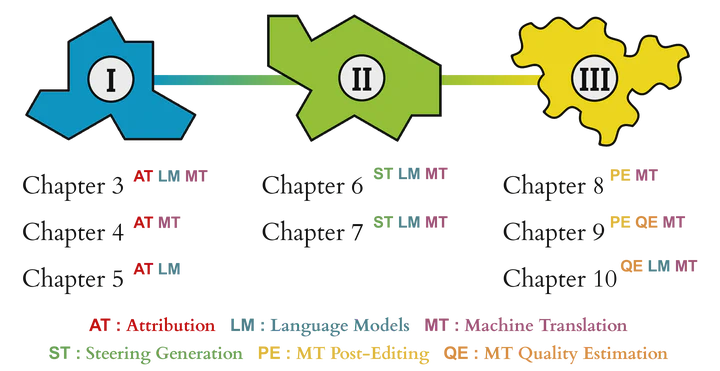

This dissertation investigates how to make AI translation systems more transparent, controllable, and useful for professional translators. As machine translation becomes increasingly prevalent in our globalized world, understanding and controlling these systems is crucial to ensuring accurate, culturally appropriate translations. The research develops new methods to peek inside the ‘black box’ of AI translation models and understand how they make decisions. In this thesis, we develop open-source tools and methodological frameworks to quantify how language models rely on context when generating text, and how this reliance can sometimes result in unwanted biases. We then propose novel techniques that allow users to control translation style, for instance making translations sound more formal or informal, or mimicking the writing style of specific human translators. This is particularly valuable for literary translation, where preserving an author’s voice is essential. The research also examines how the inner workings of language models can inform the daily work of professional translators. Through studies with translators working across diverse language families, our findings show that the effectiveness of machine translation varies dramatically depending on how similar the languages involved are. We also develop new methods that automatically highlight potential translation errors by accounting for the uncertainty of machine translation models, helping translators work more efficiently while maintaining quality. Overall, this dissertation bridges the gap between scientific insights into how language models work and practical benefits for the users of these systems, paving the way for better human-AI interaction practices for professional translators and everyday users worldwide.