Steering Large Language Models for Machine Translation Personalization

We evaluate prompting- and steering-based methods for machine translation personalization in the literary domain.

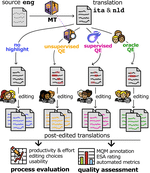

Unsupervised Word-level Quality Estimation for Machine Translation Through the Lens of Annotators (Dis)agreement

We evaluate unsupervised word-level quality estimation (WQE) methods for machine translation, focusing on their robustness to human …

QE4PE: Word-level Quality Estimation for Human Post-Editing

We investigate the impact of word-level quality estimation on MT post-editing with 42 professional post-editors.

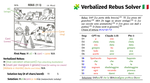

Non Verbis, Sed Rebus: Large Language Models are Weak Solvers of Italian Rebuses

We evaluate the rebus-solving capabilities of large language models on a new Italian dataset.

Multi-property Steering of Large Language Models with Dynamic Activation Composition

We propose Dynamic Activation Composition, an adaptive approach for multi-property activation steering of LLMs.

Model Internals-based Answer Attribution for Trustworthy Retrieval-Augmented Generation

MIRAGE uses model internals for faithful answer attribution in retrieval-augmented generation applications.

A Primer on the Inner Workings of Transformer-based Language Models

This primer provides a concise technical introduction to the current techniques used to interpret the inner workings of …

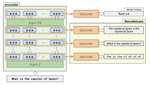

DecoderLens: Layerwise Interpretation of Encoder-Decoder Transformers

We propose DecoderLens, a method to interpret the iterative refinement of representations in encoder-decoder Transformer models.

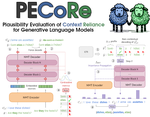

Quantifying the Plausibility of Context Reliance in Neural Machine Translation

We introduce PECoRe, an interpretability framework for identifying context dependence in language model generations.

RAMP: Retrieval and Attribute-Marking Enhanced Prompting for Attribute-Controlled Translation

We introduce Retrieval and Attribute-Marking enhanced Prompting (RAMP) to perform attribute-controlled MT with multilingual LLMs.

Are Character-level Translations Worth the Wait? Comparing ByT5 and mT5 for Machine Translation

We analyze input contributions of char-level MT models and show how they modulate word- and character-level information.

Inseq: An Interpretability Toolkit for Sequence Generation Models

We present Inseq, a Python library to democratize access to interpretability analyses of sequence generation models.

Probing Linguistic Knowledge in Italian Neural Language Models across Language Varieties

We investigate whether and how using different architectures of probing models affects the performance of Italian transformers in …

That Looks Hard: Characterizing Linguistic Complexity in Humans and Language Models

This paper investigates the relationship between two complementary perspectives in the human assessment of sentence complexity and how …

Teaching NLP with Bracelets and Restaurant Menus: An Interactive Workshop for Italian Students

We developed an interactive workshop designed to introduce NLP and computational linguistics to Italian high schoolers.

UmBERTo-MTSA @ AcCompl-It: Improving Complexity and Acceptability Prediction with Multi-task Learning on Self-Supervised Annotations

This work describes a self-supervised data augmentation approach used to improve learning models’ performances when only a …

Interpreting Neural Language Models for Linguistic Complexity Assessment

This thesis presents a model-driven study of multiple phenomena associated with linguistic complexity, and how those get encoded by …