3 Complexity Phenomena in Linguistic Annotations and Language Models

This chapter investigates the relationship between online gaze metrics and offline perceived complexity judgments by studying how the two viewpoints are represented by a neural language model trained on human-produced data. First, a preliminary analysis of linguistic phenomena associated with the two complexity viewpoints is performed, highlighting similarities and differences across metrics. The effectiveness of a regressor based on explicit linguistic features is then evaluated for sentence complexity prediction and compared to the results obtained by a fine-tuned neural language model with contextual representations. In conclusion, the linguistic competence inside the language model’s embeddings is probed before and after fine-tuning, showing how linguistic information encoded in representations changes as the model learns to predict complexity.

Given the conceptual similarity between raw cognitive processing and human perception of complexity, this chapter investigates whether the relation between eye-tracking metrics and complexity judgments can be highlighted empirically in human annotations and language model representations. With this aim, linguistic features associated with various sentence-level structural phenomena are analyzed in terms of their correlation with offline and online complexity metrics. The performance of models using either complexity-related explicit features or contextualized word embeddings is evaluated, focusing mainly on the neural language model ALBERT (Lan et al. 2020) introduced in Section 2.2. The results highlight how both explicit features and learned representations obtain comparable performances when predicting complexity scores. Finally, the focus is shifted to studying how complexity-related properties are encoded in the representations of ALBERT.

This perspective goes in the direction of exploiting human processing data to address the interpretability issues of unsupervised language representations (Hollenstein, Torre, et al. 2019; Gauthier and Levy 2019; Abnar et al. 2019), leveraging the probing task approach introduced in Section 2.3.1. It is observed that online and offline complexity fine-tuning produces a consequent increase in probing performances for complexity-related features during probing experiments. This investigation has the specific purpose of studying whether and how learning a new task affects the linguistic properties encoded in pretrained representations. While pre-trained models have been widely studied using probing methods, the effect of fine-tuning on encoded information was seldom investigated. To my best knowledge, no previous work has taken into account sentence complexity assessment as a fine-tuning task for NLMs. Results suggest that the model’s abilities during training are interpretable from a linguistic perspective and are possibly related to its predictive capabilities for complexity assessment.

Contributions This is the first work displaying the connection between online and offline complexity metrics and studying how a neural language model represents them. This work:

Provides a comprehensive analysis of linguistic phenomena correlated with eye-tracking data and human perception of complexity, addressing similarities and differences from a linguistically-motivated perspective across metrics and at different levels of granularity;

Compares the performance of models using both explicit features and unsupervised contextual representations when predicting online and offline sentence complexity; and

Shows the natural emergence of complexity-related linguistic phenomena in the representations of language models trained on complexity metrics.16

3.1 Data and Preprocessing

The experiments of this chapter leverage two corpora, each capturing different aspects of linguistic complexity:

Eye-tracking For online complexity metrics, only the monolingual English portion of GECO (Cop et al. 2017), presented in Section 1.3.3, was used. Four online metrics spanning multiple phases of cognitive processing are selected, respectively: first pass duration (FPD), total fixation count (FXC), total fixation duration (TFD) and total regression duration (TRD) (see Table 1.3 for more details). Metrics are sum-aggregated at sentence-level and averaged across participants to obtain a single label for each metric-sentence pair. As a final step to make the corpus more suitable for linguistic complexity analysis, all utterances with fewer than five words, deemed uninteresting from a cognitive processing perspective, are removed.

Perceived Complexity For the offline evaluation of sentence complexity, the English portion of the corpus by Brunato et al. (2018) was used (Section 1.3.2). Sentences in the corpus have uniformly-distributed lengths ranging between 10 and 35 tokens. Each sentence is associated with 20 ratings of perceived-complexity on a 1-to-7 point scale. Duplicates and sentences for which less than half of the annotators agreed on a score in the range \(\mu_n \pm \sigma_n\), where \(\mu_n\) and \(\sigma_n\) are respectively the average and standard deviation of all annotators’ judgments for sentence \(n\) were removed to reduce noise coming from the annotation procedure. Again, scores are averaged across annotators to obtain a single metric for each sentence.

Table 3.1 presents an overview of the two corpora after preprocessing. The resulting eye-tracking (ET) corpus contains roughly four times more sentences than the perceived complexity (PC) one, with shorter words and sentences on average. The differences in sizes and domains between the two corpora account for multi-genre linguistic phenomena in the following analysis.

| Perceived Complexity | Eye-tracking (GECO) | |

|---|---|---|

| labels | PC | FPD, FXC, TFD, TRD |

| domain(s) | financial news | literature |

| aggregation steps | avg. annotators | sentence sum-aggregation + avg. participants |

| filtering steps | filtering by agreement + remove duplicates | min. length > 5 |

| # of sentences | 1115 | 4041 |

| # of tokens | 21723 | 52131 |

| avg. sent. length | 19.48 | 12.9 |

| avg. token length | 4.95 | 4.6 |

| Length-binned subsets (# of sentences) | ||

| Bin 10±1 size | 173 | 899 |

| Bin 15±1 size | 163 | 568 |

| Bin 20±1 size | 164 | 341 |

| Bin 25±1 size | 151 | 215 |

| Bin 30±1 size | 165 | 131 |

| Bin 35±1 size | 147 | 63 |

3.2 Analysis of Linguistic Phenomena

As a first step to investigate the connection between the two complexity paradigms, the correlation of online and offline complexity labels with various linguistic phenomena is evaluated. The Profiling-UD tool (Brunato et al. 2020) introduced in Section 1.2.1 is used to annotate each sentence in our corpora and extract from it ~100 features representing their linguistic structure according to the Universal Dependencies formalism (Nivre et al. 2016). These features capture a comprehensive set of phenomena, from basic information (e.g. sentence and word length) to more complex aspects of sentence structure (e.g. parse tree depth, verb arity), including properties related to sentence complexity at different levels of description. A summary of the most relevant features is presented in Appendix A. Features are ranked using their Spearman’s correlation score with complexity metrics, and scores are leveraged to highlight the relation between linguistic phenomena and complexity paradigms.

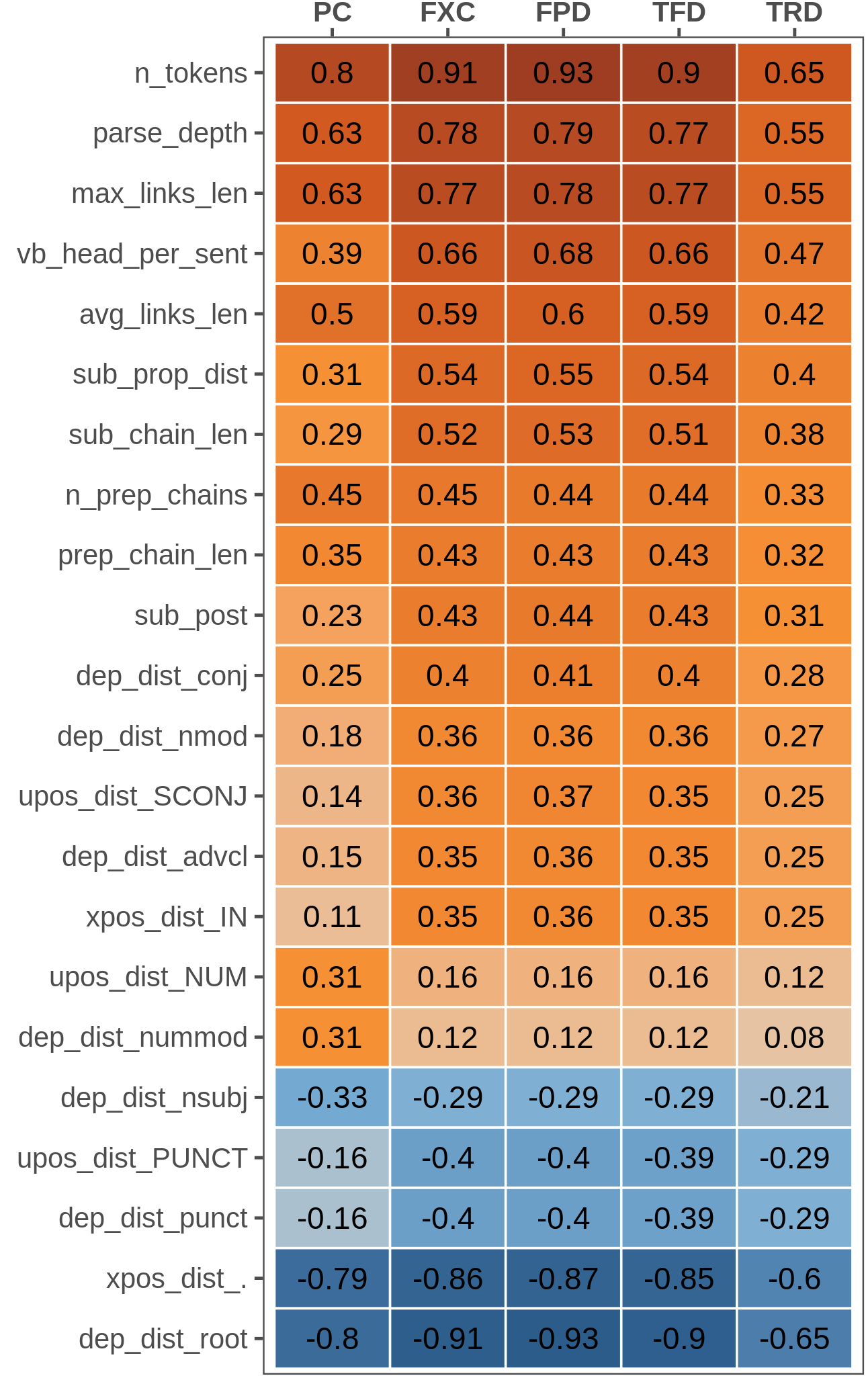

Figure 3.1: Ranking of the most correlated linguistic features for selected metrics. All of Spearman’s correlation coefficients have \(p<0.001\).

The correlation scores analysis highlights how features showing a significant correlation with eye-tracking metrics are twice as many as those correlating with PC scores and generally tend to have higher coefficients, except for the total regression duration (TRD) metric. Nevertheless, the most correlated features are the same across all metrics. Figure 3.1 reports correlation scores for features showing a strong connection (\(|\rho|>0.3\)) with at least one of the evaluated metrics. As expected, sentence length (n_tokens) and other related features capturing structural complexity aspects occupy the top positions in the ranking. Among those, we can note the length of dependency links (max_links_len, avg_links_len) and the depth of the whole parse tree or selected sub-trees, i.e. nominal chains headed by a preposition (parse_depth, n_prep_chains). Similarly, the distribution of subordinate clauses (sub_prop_dist, sub_post) is positively correlated with all metrics but with a more substantial effect for eye-tracking ones, especially in the presence of longer embedded chains (sub_chain_len). Interestingly, the presence of numbers (upos_NUM, dep_nummod) affects only the offline perception of complexity, while it is never strongly correlated with all eye-tracking metrics. This finding is expected since numbers are very short tokens and, like other functional POS, were never found to be strongly correlated with online reading in our results. Conversely, numerical information has been identified as a factor hampering sentence readability and understanding (Rello et al. 2013).

3.2.1 Linguistic Phenomena in Length-controlled Bins

Unsurprisingly, sentence length is the most correlated predictor for all complexity metrics. Since many linguistic features highlighted in our analysis are strongly related to sentence length, we tested whether they maintain a relevant influence when this parameter is controlled. To this end, Spearman’s correlation was computed between features and complexity tasks, but this time considering bins of sentences having approximately the same length. Specifically, we split each corpus into six bins of sentences with 10, 15, 20, 25, 30, and 35 tokens, respectively, with a range of ±1 tokens per bin to select a reasonable number of sentences for our analysis. Resulting subsets have a relatively constant size for the PC corpus, which was constructed ad-hoc to have such uniform length distribution, but have a sharply decreasing size for the eye-tracking corpus (see Table 3.1, bott. While deemed appropriate in the context of this correlation analysis, the disparity in bin sizes may play a significant role in hampering the performances of models trained on binned linguistic complexity data. This perspective is discussed in Section 3.3.

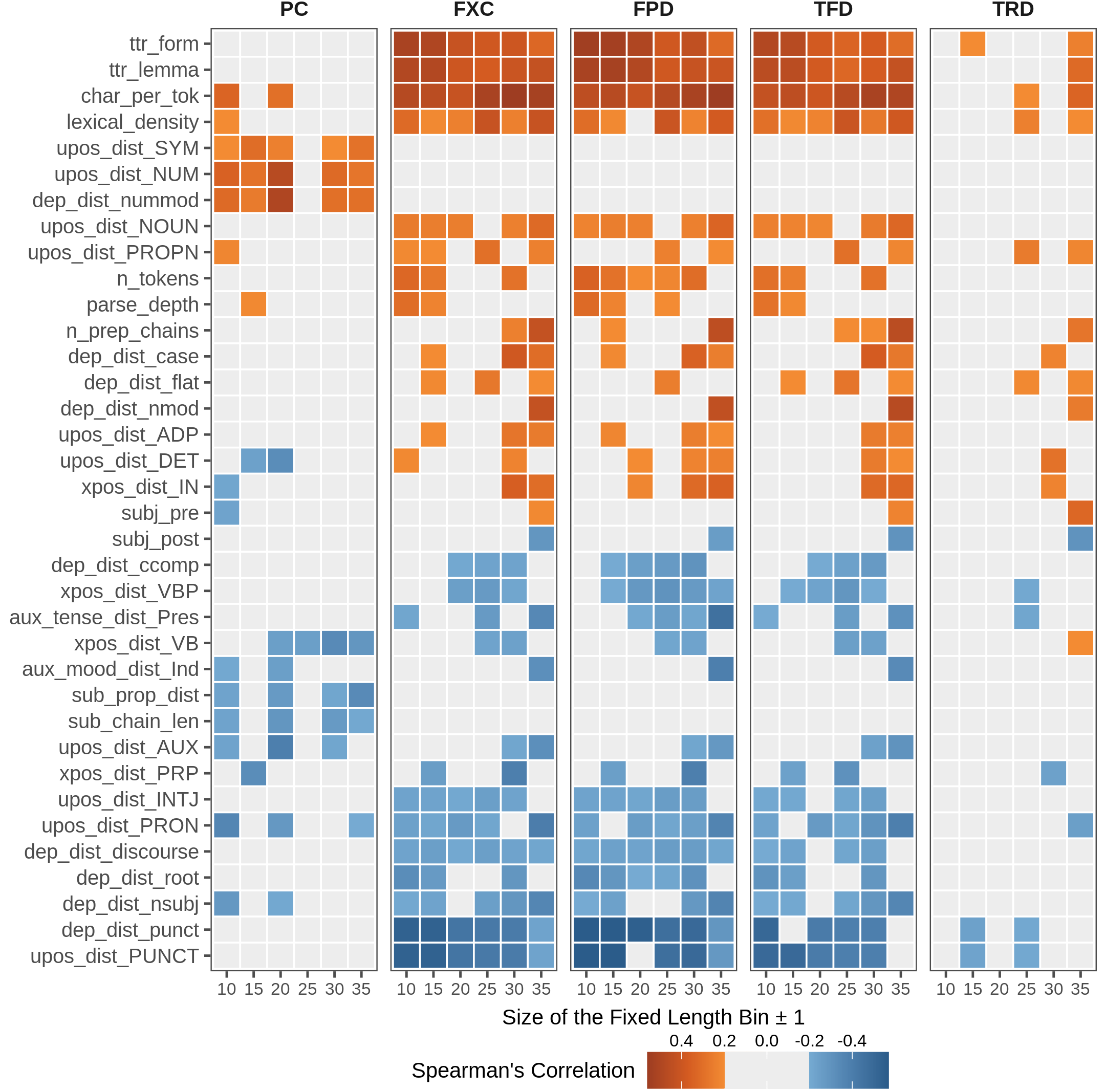

Figure 3.2: Rankings of the most correlated linguistic features for metrics within length-binned subsets of the two corpora. Squares show the correlation between features (left axis) and a complexity metric (top) at a specific bin of length (bottom). Coefficients \(\geq\) 0.2 or \(\leq\) -0.2 are highlighted, and have \(p<0.001\).

Figure 3.2 reports the new rankings of the most correlated linguistic features within each bin across complexity metrics (\(|\rho| > 0.2\)). Again, we observe that features showing a significant correlation with complexity scores are fewer for PC bins than for eye-tracking ones. This fact depends on controlling for sentence length and the small size of bins for the whole dataset. As in the coarse-grained analysis, TRD is the eye-tracking metric less correlated to linguistic features, while the other three (FXC, FPD, TFD) show a homogeneous behavior across bins. For the latter, vocabulary-related features (token-type ratio, average word length, lexical density) are always positive and top-ranked in all bins, especially when considering shorter sentences (i.e. from 10 to 20 tokens). For PC, this is true only for some of them (word length and lexical density). On another note, features encoding numerical information are still highly correlated with the offline perception of complexity in almost all bins.

Interestingly, features modeling subordination phenomena extracted from fixed-length sentences exhibit a reverse trend than when extracted from the whole corpus, i.e. they are negatively correlated with judgments. If, on the one hand, an increase in the presence of subordination for longer sentences (possibly making sentences more convoluted) was expected, on the other hand, when the length is controlled, findings suggest that subordinate structures are not necessarily perceived as a symptom of sentence complexity.

The analysis also highlights how linguistic features relevant to online and offline complexity are different when controlling for sentence length. This aspect, in particular, was not evident from the previous coarse-grained analysis. Despite blocking sentence length, gaze measures are still significantly connected to length-related phenomena (high correlation with n_tokens at various length bins). This observation can be possibly due to the ±1 margin applied for sentence selection and the high sensitivity of behavioral metrics to small input changes.

3.3 Modeling Online and Offline Linguistic Complexity

Given the high correlations reported above, the next step involves quantifying the importance of explicit linguistic features from a modeling standpoint. Table 3.2 presents the RMSE and \(R^2\) scores of predictions made by baselines and models for the selected complexity metrics. Performances are tested with a 5-fold cross-validation regression with a fixed random seed on each metric. Our baselines use average metric scores of all training sentences (Avg. score) and average scores of sentences binned by their length, expressed in number of tokens, as predictions (Bin average). The two linear SVM models leverage explicit linguistic features, using respectively only the n_tokens feature (SVM length) and the whole set of linguistic features presented above (SVM feats). Besides those, the performances of a state-of-the-art Transformer neural language model relying entirely on contextual word embeddings are equally tested. ALBERT (Lan et al. (2020); see Section 2.2) as a lightweight yet effective alternative to BERT (Devlin et al. 2019) for obtaining contextual word representations, using its last-layer [CLS] sentence embedding as input for a linear regressor during fine-tuning and testing. We selected the last layer representations, despite strong evidence on the importance of intermediate representation in encoding language properties, because we aim to investigate how superficial layers encode complexity-related competence. Given the availability of parallel eye-tracking annotations, we train ALBERT using multitask learning with hard parameter sharing (Caruana 1997) on gaze metrics.17

| \(\sqrt{E^2}\) | \(R^2\) | \(\sqrt{E^2}\) | \(R^2\) | \(\sqrt{E^2}\) | \(R^2\) | \(\sqrt{E^2}\) | \(R^2\) | \(\sqrt{E^2}\) | \(R^2\) | |

|---|---|---|---|---|---|---|---|---|---|---|

| Statistical baselines | ||||||||||

| Avg. score | 0.87 | 0 | 6.17 | 0.06 | 1078 | 0.06 | 1297 | 0.06 | 540 | 0.03 |

| Bin average | 0.53 | 0.62 | 2.36 | 0.86 | 374 | 0.89 | 532 | 0.85 | 403 | 0.45 |

| Explicit features | ||||||||||

| SVM length | 0.54 | 0.62 | 2.19 | 0.88 | 343 | 0.9 | 494 | 0.86 | 405 | 0.45 |

| SVM feats | 0.44 | 0.74 | 1.77 | 0.92 | 287 | 0.93 | 435 | 0.92 | 400 | 0.46 |

| Learned representations | ||||||||||

| ALBERT | 0.44 | 0.75 | 1.98 | 0.92 | 302 | 0.93 | 435 | 0.9 | 382 | 0.49 |

From Table 3.2 it can be noted that:

The length-binned average baseline is very effective in predicting complexity scores and gaze metrics, which is unsurprising given the extreme correlation between length and complexity metrics presented in Figure 3.1;

The SVM feats model shows considerable improvements if compared to the length-only SVM model for all complexity metrics, highlighting how length alone accounts for much but not for the entirety of variance in complexity scores;

ALBERT performs on-par with the SVM feats model on all complexity metrics despite the small dimension of the fine-tuning corpora and the absence of explicit linguistic information.

A possible interpretation of ALBERT’s strong performances is that the model implicitly develops competence related to phenomena encoded by linguistic features while training on online and offline complexity prediction. We explore this perspective in Section 3.4.

3.3.1 Modeling Complexity in Length-controlled Bins

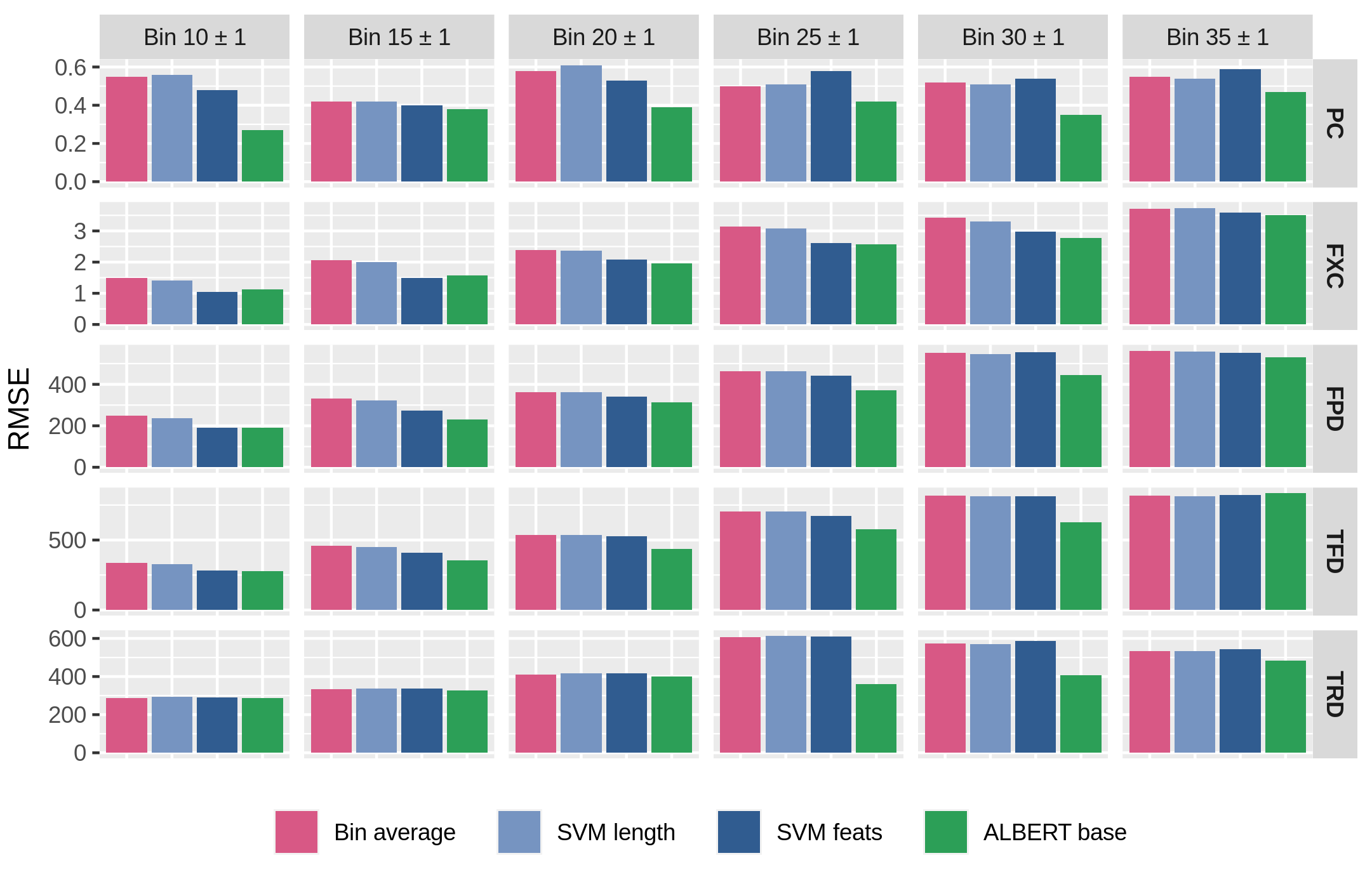

Figure 3.3: Average Root-Mean-Square Error (RMSE) scores for models in Table 3.2, performing 5-fold cross-validation on the length-binned subsets used for Figure 3.2. Lower scores are better.

Similarly to the approach adopted in Section 3.2.1, the performances of models are tested on length-binned data to verify their consistency in the context of length-controlled sequences. Figure 3.3 presents RMSE scores averaged with 5-fold cross-validation over the length-binned sentences subsets for all complexity metrics. It can be observed that ALBERT outperforms the SVM with linguistic features on nearly all bins and metrics, showing the largest gains on intermediate bins for PC and gaze durations (FPD, TFD, TRD). Interestingly, models’ overall performances follow a length-dependent increasing trend for eye-tracking metrics, but not for PC. This behavior can be possibly explained in terms of the high sensibility to length previously highlighted for online metrics, as well as the broad variability in bin dimensions. It can also be observed how the SVM model based on explicit linguistic features (SVM feats) performs poorly on larger bins for all tasks, sometimes being even worse than the bin-average baseline. While this behavior seems surprising given the positive influence of features highlighted in Table 3.2, this phenomenon can be attributed to the small dimension of longer bins, which negatively impacts the generalization capabilities of the regressor. The relatively better scores achieved by ALBERT in those, instead, support the effectiveness of information stored in pretrained language representations when a limited number of examples are available.

3.4 Probing Linguistic Phenomena in ALBERT Representations

As shown in the previous section, ALBERT performances in complexity predictions are comparable to those of an SVM relying on explicit linguistic features and even better than those when controlling for length. The probing task interpretability paradigm (Section 2.3.1) is adopted to investigate if ALBERT encodes the linguistic knowledge that we identified as strongly correlated with online and perceived sentence complexity during training and prediction. In particular, the aim of this investigation is two-fold:

Probing ALBERT’s innate competence in relation to the broad spectrum of linguistic features described in Appendix A; and

Verifying whether, and in which respect, this competence is affected by a fine-tuning process on the complexity assessment metrics.

Three UD English treebanks spanning different textual genres – EWT, GUM, and ParTUT respectively by Silveira et al. (2014), Zeldes (2017), and Sanguinetti and Bosco (2015) – were aggregated, obtaining a final corpus of 18,079 sentences with gold linguistic information which was used to conduct probing experiments. The Profiling-UD tool was again leveraged to extract \(n\) sentence-level linguistic features \(\mathcal{Z}=z_1, \dots, z_n\) from gold linguistic annotations. Representations \(A(x)\) were generated for all corpus sentences using the last-layer [CLS] embedding of a pretrained ALBERT base model without additional fine-tuning, and \(n\) single-layer perceptron regressors \(g_i: A(x) \rightarrow z_i\) are trained to map representations \(A(x)\) to each linguistic feature \(z_i\). Finally, the error and \(R^2\) scores of each \(g_i\) were evaluated as proxies for the quality of representations \(A(x)\) in encoding their respective linguistic feature \(z_i\). The same evaluation is repeated for ALBERTs fine-tuned respectively on perceived complexity labels (PC) and on all eye-tracking labels with multitask learning (ET), averaging scores with 5-fold cross-validation. A selected subset of results is shown on the left side of Table 3.3.

| \(\sqrt{E^2}\) | \(R^2\) | \(\sqrt{E^2}\) | \(R^2\) | \(\sqrt{E^2}\) | \(R^2\) | \(\sqrt{E^2}\) | \(R^2\) | \(\sqrt{E^2}\) | \(R^2\) | |

|---|---|---|---|---|---|---|---|---|---|---|

| n_tokens | 8.19 | 0.26 | 4.66 | 0.76 | 2.87 | 0.91 | 8.66 | 0.18 | 6.71 | 0.51 |

| parse_depth | 1.47 | 0.18 | 1.18 | 0.48 | 1.04 | 0.6 | 1.50 | 0.16 | 1.22 | 0.43 |

| vb_head_per_sent | 1.38 | 0.15 | 1.26 | 0.3 | 1.14 | 0.42 | 1.44 | 0.09 | 1.30 | 0.25 |

| xpos_dist_. | 0.05 | 0.13 | 0.04 | 0.41 | 0.04 | 0.42 | 0.04 | 0.18 | 0.04 | 0.38 |

| avg_links_len | 0.58 | 0.12 | 0.53 | 0.29 | 0.52 | 0.31 | 0.59 | 0.1 | 0.56 | 0.2 |

| max_links_len | 5.20 | 0.12 | 4.08 | 0.46 | 3.75 | 0.54 | 5.24 | 0.11 | 4.73 | 0.28 |

| n_prep_chains | 0.74 | 0.11 | 0.67 | 0.26 | 0.66 | 0.29 | 0.72 | 0.14 | 0.69 | 0.21 |

| sub_prop_dist | 0.35 | 0.09 | 0.33 | 0.13 | 0.31 | 0.22 | 0.34 | 0.05 | 0.32 | 0.15 |

| upos_dist_PRON | 0.08 | 0.09 | 0.08 | 0.14 | 0.08 | 0.07 | 0.07 | 0.23 | 0.08 | 0.15 |

| pos_dist_NUM | 0.05 | 0.08 | 0.05 | 0.06 | 0.05 | 0.02 | 0.05 | 0.16 | 0.05 | 0.06 |

| dep_dist_nsubj | 0.06 | 0.08 | 0.06 | 0.1 | 0.06 | 0.05 | 0.05 | 0.17 | 0.06 | 0.11 |

| char_per_tok | 0.89 | 0.07 | 0.87 | 0.12 | 0.90 | 0.05 | 0.82 | 0.22 | 0.86 | 0.14 |

| prep_chain_len | 0.60 | 0.07 | 0.57 | 0.17 | 0.56 | 0.19 | 0.59 | 0.12 | 0.56 | 0.18 |

| sub_chain_len | 0.70 | 0.07 | 0.67 | 0.15 | 0.62 | 0.26 | 0.71 | 0.04 | 0.66 | 0.16 |

| dep_dist_punct | 0.07 | 0.06 | 0.07 | 0.06 | 0.07 | 0.14 | 0.07 | 0.06 | 0.07 | 0.14 |

| dep_dist_nmod | 0.05 | 0.06 | 0.05 | 0.07 | 0.05 | 0.06 | 0.05 | 0.09 | 0.05 | 0.09 |

| sub_post | 0.44 | 0.05 | 0.46 | 0.12 | 0.44 | 0.18 | 0.47 | 0.05 | 0.45 | 0.14 |

| dep_dist_case | 0.07 | 0.05 | 0.06 | 0.06 | 0.07 | 0.08 | 0.07 | 0.07 | 0.07 | 0.1 |

| lexical_density | 0.14 | 0.05 | 0.13 | 0.03 | 0.13 | 0.03 | 0.13 | 0.13 | 0.13 | 0.13 |

| dep_dist_compound | 0.06 | 0.04 | 0.06 | 0.05 | 0.06 | 0.03 | 0.06 | 0.1 | 0.06 | 0.07 |

| dep_dist_conj | 0.04 | 0.03 | 0.04 | 0.04 | 0.04 | 0.04 | 0.05 | 0.02 | 0.04 | 0.03 |

| ttr_form | 0.08 | 0.03 | 0.08 | 0.05 | 0.08 | 0.05 | 0.08 | 0.05 | 0.08 | 0.05 |

| dep_dist_det | 0.06 | 0.03 | 0.06 | 0.02 | 0.06 | 0.04 | 0.06 | 0.03 | 0.06 | 0.03 |

| dep_dist_aux | 0.04 | 0.02 | 0.04 | 0.01 | 0.04 | 0.01 | 0.04 | 0.06 | 0.04 | 0.04 |

| pos_dist_VBN | 0.03 | 0.01 | 0.03 | 0 | 0.03 | 0 | 0.03 | 0.01 | 0.03 | 0 |

| xpos_dist_VBZ | 0.04 | 0.01 | 0.04 | 0.01 | 0.04 | 0.02 | 0.04 | 0.02 | 0.04 | 0.02 |

| ttr_lemma | 0.09 | 0.01 | 0.09 | 0.06 | 0.09 | 0.06 | 0.09 | 0.04 | 0.09 | 0.03 |

As it can be observed, ALBERT’s last-layer sentence representations have relatively low knowledge of complexity-related probes, but their performances highly increase after fine-tuning. Specifically, a noticeable improvement was obtained on features that were already better encoded in base pretrained representation, i.e. sentence length and related, suggesting that fine-tuning possibly accentuates only properties already well-known by the model, regardless of the target task. To verify that this isn’t the case, the same probing tests were repeated on ALBERT models fine-tuned on the smallest length-binned subset (i.e. \(10\pm1\) tokens) presented in previous sections. The right side of Table 3.3 presents the resulting scores. From the length-binned correlation analysis of Section 3.2, PC scores were observed to be mostly uncorrelated with length phenomena, while ET scores remain significantly affected despite our controlling of sequence size. This observation also holds for length-binned probing task results, where the PC model seems to neglect length-related properties in favor of task-specific ones that were also highlighted in our fine-grained correlation analysis (e.g. word length, numbers, explicit subjects). The ET-trained model follows the same behavior, retaining strong but lower performances for length-related features.

In conclusion, although higher probing task performances after fine-tuning are not direct proof that the neural language model exploits newly-acquired morpho-syntactic and syntactic information, results suggest that training on tasks strongly connected with underlying linguistic structures triggers a change in model representations resulting in a better encoding of related linguistic properties.

3.5 Summary

In this chapter, the connection between eye-tracking metrics and the offline perception of sentence complexity was investigated from an experimental standpoint. An in-depth correlation analysis was performed between complexity scores and sentence linguistic properties at different granularity levels, highlighting the strong relationship between metrics and length-affine properties and revealing different behaviors when controlling for sentence length. Models using explicit linguistic features and unsupervised word embeddings were evaluated on complexity prediction, showing comparable performances across metrics. Finally, the encoding of linguistic properties in a neural language model’s contextual representations was tested with probing tasks. This approach highlighted the natural emergence of task-related linguistic properties within the model’s representations after the fine-tuning process. Thus, it can be conjectured that a relation subsists between the model’s linguistic abilities during the training procedure and its downstream performances on morphosyntactically-related tasks and that linguistic probes may provide a reasonable estimate of the task-oriented quality of representations.

References

Abnar, Samira, Lisa Beinborn, Rochelle Choenni, and Willem Zuidema. 2019. “Blackbox Meets Blackbox: Representational Similarity & Stability Analysis of Neural Language Models and Brains.” In Proceedings of the 2019 Acl Workshop Blackboxnlp: Analyzing and Interpreting Neural Networks for Nlp, 191–203. Florence, Italy: Association for Computational Linguistics. https://doi.org/10.18653/v1/W19-4820.

Brunato, Dominique, Andrea Cimino, Felice Dell’Orletta, Giulia Venturi, and Simonetta Montemagni. 2020. “Profiling-UD: A Tool for Linguistic Profiling of Texts.” In Proceedings of the 12th Language Resources and Evaluation Conference, 7145–51. Marseille, France: European Language Resources Association. https://www.aclweb.org/anthology/2020.lrec-1.883.

Brunato, Dominique, Lorenzo De Mattei, Felice Dell’Orletta, Benedetta Iavarone, and Giulia Venturi. 2018. “Is This Sentence Difficult? Do You Agree?” In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, 2690–9. Brussels, Belgium: Association for Computational Linguistics. https://doi.org/10.18653/v1/D18-1289.

Caruana, Rich. 1997. “Multitask Learning.” Machine Learning 28: 41–75. https://www.cs.utexas.edu/~kuipers/readings/Caruana-mlj-97.pdf.

Cop, Uschi, Nicolas Dirix, Denis Drieghe, and Wouter Duyck. 2017. “Presenting Geco: An Eyetracking Corpus of Monolingual and Bilingual Sentence Reading.” Behavior Research Methods 49 (2). Springer: 602–15.

Devlin, Jacob, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. 2019. “BERT: Pre-Training of Deep Bidirectional Transformers for Language Understanding.” In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), 4171–86. Minneapolis, Minnesota: Association for Computational Linguistics. https://doi.org/10.18653/v1/N19-1423.

Gauthier, Jon, and Roger Levy. 2019. “Linking Artificial and Human Neural Representations of Language.” In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (Emnlp-Ijcnlp), 529–39. Hong Kong, China: Association for Computational Linguistics. https://doi.org/10.18653/v1/D19-1050.

Hollenstein, Nora, Antonio de la Torre, Nicolas Langer, and Ce Zhang. 2019. “CogniVal: A Framework for Cognitive Word Embedding Evaluation.” In Proceedings of the 23rd Conference on Computational Natural Language Learning (Conll), 538–49. Hong Kong, China: Association for Computational Linguistics. https://doi.org/10.18653/v1/K19-1050.

Lan, Zhenzhong, Mingda Chen, Sebastian Goodman, Kevin Gimpel, Piyush Sharma, and Radu Soricut. 2020. “ALBERT: A Lite BERT for Self-Supervised Learning of Language Representations.” In International Conference on Learning Representations. https://openreview.net/forum?id=H1eA7AEtvS.

Nivre, Joakim, Marie-Catherine de Marneffe, Filip Ginter, Yoav Goldberg, Jan Hajič, Christopher D. Manning, Ryan McDonald, et al. 2016. “Universal Dependencies V1: A Multilingual Treebank Collection.” In Proceedings of the Tenth International Conference on Language Resources and Evaluation (LREC’16), 1659–66. Portorož, Slovenia: European Language Resources Association (ELRA). https://www.aclweb.org/anthology/L16-1262.

Rello, Luz, Susana Bautista, Ricardo Baeza-Yates, Pablo Gervás, Raquel Hervás, and Horacio Saggion. 2013. “One Half or 50%? An Eye-Tracking Study of Number Representation Readability.” In Human-Computer Interaction – Interact 2013, edited by Paula Kotzé, Gary Marsden, Gitte Lindgaard, Janet Wesson, and Marco Winckler, 229–45. Berlin, Heidelberg: Springer Berlin Heidelberg.

Sanguinetti, Manuela, and Cristina Bosco. 2015. “PartTUT: The Turin University Parallel Treebank.” In Harmonization and Development of Resources and Tools for Italian Natural Language Processing Within the Parli Project, edited by Roberto Basili, Cristina Bosco, Rodolfo Delmonte, Alessandro Moschitti, and Maria Simi, 51–69. Cham: Springer International Publishing. https://doi.org/10.1007/978-3-319-14206-7\_3.

Silveira, Natalia, Timothy Dozat, Marie-Catherine de Marneffe, Samuel Bowman, Miriam Connor, John Bauer, and Chris Manning. 2014. “A Gold Standard Dependency Corpus for English.” In Proceedings of the Ninth International Conference on Language Resources and Evaluation (LREC’14), 2897–2904. Reykjavik, Iceland: European Language Resources Association (ELRA). http://www.lrec-conf.org/proceedings/lrec2014/pdf/1089_Paper.pdf.

Zeldes, Amir. 2017. “The GUM Corpus: Creating Multilayer Resources in the Classroom.” Language Resources and Evaluation 51: 581–612.

Code available at https://github.com/gsarti/interpreting-complexity↩

Training procedure and parameters are thoroughly described in Appendix F.↩